Composition Forum 40, Fall 2018

http://compositionforum.com/issue/40/

Message in a Bottle: Expert Readers, English Language Arts, and New Directions for Writing Studies

Abstract: Expert readers’ responses to texts offer specific, meaningful insights useful in building English language arts (ELA) models for student writers. In the case of academic peer review, previous research has demonstrated that expert reviewers have specific expectations involving readers, texts, and processes. Identifying congruence between research on expert readers and the design of ELA models, however, has proven elusive—and detrimental to the advancement of student learning. One promising integrative direction is the study of two complementary ELA models, one emphasizing the role of meta-reading and the other of cognition. To explore the capability of an ELA model for writing studies informed by expert reader practice, we present a case study that has educative implications for the teaching of writing. Specifically, the study reports the observations of six expert readers reviewing manuscripts for an academic journal in writing studies. Following completion of an online survey of their reading aims as they reviewed manuscripts for publication, colleagues participated in a 30-minute semi-structured recorded interview about their strategies. The interview responses were coded using both meta-reading and cognitive models. Based on analysis of 529 reviewer comments, the findings support conceptualization of integrated, multi-faceted ELA models. While limited, our study has generative research and classroom implications for the development of writing studies pedagogy.

Messages in bottles are used to study ocean currents; in our case, messages we gathered from expert readers have helped us plumb the nature of reading expertise in the venue of scholarly peer review and to offer an integrative perspective of reading and writing as language arts. To extend the metaphor, we have found that understanding the dynamics of these currents is complex. In fact, even the terms we use in writing about English Language Arts (ELA) are contested. While the aim of our study is straightforward—based on our findings, we offer a meta-cognitive reading model for ELA and provide details for the development of writing studies instruction derived from our model—it is useful to begin with a crosswalk between the aim of our research and the inferences we draw.

In order to study ELA in a principled fashion, we define ELA as a view of language reflected in a defined construct model—a hypothesized depiction of the variables of English language use. Broadly, such a model includes reading, writing, speaking, and listening variables as they are understood as intrapersonal, cognitive, and interpersonal domains. A moment’s pause brings us to the stunning complexity of that sentence. If we take the four variables and try to situate them within the three domains, we realize that there are twenty-four possible permutations. Faced with such a massive starting point, we focus our study on only the metacognitive domain (as part of the intrapersonal domain) and the cognitive domain in an analysis of expert readers reviewing manuscripts for an academic journal in writing studies. While we conclude by drawing inferences from our study for reading and writing instruction, we realize that much remains unsaid and underanalyzed. Ours is a report from the field in which many programs of research are taking place that have a common aim: to advance opportunities for student learning through empirical research focusing on expertise.

Before we begin, however, it is important to establish why we have chosen to study professional expertise, especially when the study of student writing processes has been famously demonstrated by Linda Flower in her social cognitive theory of writing. First, as Harry Collins and Robert Evans have noted in Rethinking Expertise, the study of expertise is a social process—“a matter of socialization into the practices of an expert group” (3). Second, this socialization involves tacit knowledge—“the deep understanding one can only gain through social immersion in groups who possess it” (6). Third, this deep understanding involves meta-expertise spanning a range of perspectives, from technical connoisseurship involving highly detailed decisions to the transfer of expertise across knowledge domains. So, as the literature on expertise reveals, the more we understand the processes and genres that experts use, the better we are able to socialize our students into becoming writers. However, the perspective of expertise should be adopted with a recognition of limits that may not readily apply to students. To identify areas of commonality and disjuncture, we can turn to How Students Learn, a report of the National Research Council. As the committee notes, key principles are in play as students encounter new information: students have preconceptions about how the world works, and if these initial understandings are not engaged, new concepts may not be grasped; students develop competency through the organization of factual knowledge; and students benefit from a metacognitive approach to learning by defining their own goals and monitoring their success in achieving them.
Therefore, while this study is devoted to using expertise models, we begin by inviting readers to interpret our findings within this framework: while the socialization of our students into seeing themselves as members of a writing community is important, that process must be informed by the preconceptions they have when they enter our classroom; while we want to make the tacit knowledge of experts explicit to students, they may be challenged by organizing this knowledge; and, while a range of meta-expertise is involved in working as a professional, this expertise will be gained through a process of acculturation in which individual learning goals (such as the ability to understand a given writing task) and self-efficacy processes (such as the ability to feel confident in achieving that goal) are starting places. In the case study that follows, we therefore believe that we have found something valuable that occurs when expertise is examined and when students are kept at the center of our efforts.

As to design, the present study examines peer-review reading patterns of six members of the editorial board of a United States academic journal in the field of Rhetoric and Composition/Writing Studies (Phelps and Ackerman, hereafter referenced as writing studies). Part of a program of research involving expert readers (Horning), we begin the present study with a literature review in which we examine important research in two areas: empirical research focusing on meta-cognition and its applications to reading and theoretical research focusing on cognitive modeling with reading applications. We hold that metacognitive modeling (the capability of reflecting on purposeful choices) and cognitive modeling (the capability of making those choices in the first place) are interwoven in language and, hence, must be understood in terms of each other. While it is beyond the scope of the present study to determine precisely why meta-cognition and cognition have been isolated as discrete processes, one usual suspect is timed writing assessment. Used in high stakes situations in which admission, placement, and progression decisions are made, timed writing assessment promotes the view that only that which can be measured under standardized, timed conditions is significant—and all else is marginalized (Poe, et al.). Recent perspectives, however, have demonstrated that metacognitive and cognitive modeling, understood as in resonance with each other, help us understand how professional socialization is understood at emergent levels. These models also suggest how tacit knowledge is made explicit and integrated into learning processes and how learning goals and self-efficacy processes are understood as entry points for the development of meta-expertise (Lieu, et al.; MacArthur and Graham).

We then turn to our case study of the peer-review reading patterns of editorial board members of a writing studies journal. Based on our study, we offer a meta-cognitive reading model for ELA and provide details for the development of writing studies instruction derived from our model.

Literature Review: In Search of Integration

In this study, we define meta-reading awareness as the ability to reflect on one’s reading processes and make observations about those processes, and we define cognitive strategies as mental processes of reasoning and memory accompanied by reading behaviors. Expert readers in the purposive sample in the study are of special interest in that they possess a specific set of awarenesses and strategies for effective reading of extended nonfiction prose; these are needed skills that can and should be taught within the interpretative framework we provide above of situational socialization, tacit knowledge organization, and acculturated professionalism. Our study demonstrates that key aspects of readers’ meta-awareness and cognitive skills play an essential role in the specific type of scholarly and evaluative reading involved in reviewing for an academic journal. In turn, these skills are important to the development of language arts models that include writing. Coming to terms with the dynamics of reading and writing interactions is the complex work of this study.

As we noted above, those interactions are best defined as English language arts (ELA)—an old and distinguished tradition that deserves our attention. In terms of origin, we note Burke Aaron Hinsdale’s 1896 publication of Teaching the Language Arts: Speech, Reading, and Composition as a milestone event. A former school superintendent in Cleveland, Hinsdale was drawn to a unified concept of instruction in speech, reading, language, composition, and literature. Describing this concept in terms of “the correlation of the several lines of teaching” (xix), he envisioned a continuous curriculum “unlimited by grade lines” in which “principles, fact, theory, and science must, in the long run, govern and control all practical applications” (xx).

If we seek the evidence of the endurance of the vision proposed by Hinsdale, we need look no further than the November 2016 issue of Research in the Teaching of English. Under the special issue title Defining and Doing ‘English Language Arts’ in Twenty-First Century Classrooms and Teacher Education Programs, the authors took up questions of ELA in terms of pluralism, multilingualism, and social constructionism (Juzwik, et al.). Critical of heteronormativity and technological determinism, the editors and authors implicitly called for increased metacognition—“the ability to reflect on one’s own learning and make adjustments accordingly” (National Research Council, Educating for Life p. 4)—on the part of students and their instructors to share in evaluation practices. With a firm basis in elementary education (Graham, et al., Teaching Elementary) and secondary education (Graham, et al., Teaching Secondary)—and additional force given by the Common Core State Standards Initiative in 2010—ELA has been equally present in the Framework for Success in Postsecondary Writing (Council of Writing Program Administrators, National Council of Teachers of English, and National Writing Project).

Clearly, the ELA model has had important structural implications for research-based instruction in the transition between secondary education and college, as well as for the development of first-year curricula. In terms of conceptual implications, the model reminds us of the importance of the contextually bound nature of language, especially in the study of what Donald J. Lieu and his colleagues have called a New Literacy Lens in which conceptual knowledge about the nature of ELA (what it means to be an effective communicator) is combined with metacognitive knowledge about problem solving (what it means to have a working knowledge of self-regulation and self-efficacy in the communication process).

Notably, this call for meta-awareness—part of the intrapersonal domain of writing as related to self-regulation and self-efficacy—has been taken up by Crystal VanKooten in her proposal for new directions in writing studies. Drawing on a case study of six students in first-year writing courses, she identifies four concepts—process, techniques, rhetoric, and intercomparativity—through which specific metacognitive moves can be observed. These concepts provide a framework for meta-awareness that may be used to expand the body of knowledge regarding early post-secondary writing. “A more robust theorization of meta-awareness about composition and a more specific mapping of its components,” she concludes, “have the potential to benefit not only our work as teachers, scholars, and researchers, but the work of our students as they learn to communicate and to compose in a rapidly changing world.” Although not explicitly advocated, VanKooten’s research opens the door for an ELA framework that emphasizes both meta-cognition and cognition. Without attention to student ability to reflect on one’s own learning and make appropriate adjustments, ELA will drift toward a discrete skill-based model. Without attention to the mental processes of reasoning and memory, ELA will become overly concerned with reflection and fail to address skills. As our literature review demonstrates, these siloed approaches to research serve students best when integrated.

The Nature of Expertise: Meta-Cognitive Modeling for Reading

Expert reader models provide helpful analyses of what good readers do when they are reading. In order to fully understand reading processes, such bottom-up, empirical research holds that it is essential to study actual readers actually reading. Research by Terje Hillesund, for example, focuses on how readers respond to conventional as opposed to digital texts of various kinds. Asking about their different strategies for reading and researching materials of various kinds, Hillesund interviewed ten scholars in the humanities and social sciences. He distinguishes among three different kinds of reading: sustained, discontinuous, and immersive. Sustained reading is the typical approach used for reading novels, while discontinuous reading is the kind of skimming and scanning these readers use to find and browse through research materials, usually found through online searching. Immersive reading is the reading these academics do when they have found research materials they want to focus on for scholarly purposes.

In the tradition of Hillesund, Mark Ware and Mike Monkman conducted a large study of international journal reviewing, surveying authors, reviewers, and editors engaged in peer review of articles for journals in the international Thomson Scientific database. Their main findings were that peer review improves overall quality of published work, although the process could be improved by consistent use of a double-blind process. Identifying the ways that expert readers construct their ideas about authors and double-blind processes, Christine W. Tardy and Paul Kei Matsuda have examined the deeply situated, rhetorical shaping of the author identity by editorial board members of journals in Writing Studies. Expert readers do think about the authors of articles they review according to Tardy and Matsuda; their insights may also be understood as a list of reading strategies ranging from depth of knowledge to use of citation style.

Additional insight about the way experts read relates to the way writers convey their stance or position on a topic as well as how this stance is conveyed. This issue has been studied by Hong Kong Centre for Applied Language Studies scholars Ken Hyland and Feng Jiang. Writers’ stance is related both to their own responses to their topic and claims, and also to the degree of objectivity they impose (1-4). These issues come to the fore when expert readers evaluate the writers’ articles. Writers’ stances, then, play into their view of audience. As Hyland and Jiang observe,

any successfully published research article anticipates a reader’s response and itself responds to a larger discourse already in progress. Stance choices are, in other words, disciplinary practices as much as individual positions. Because writers comment on their propositions and shape their texts to the expectations of different audiences, the expression of stance varies according to discipline. (5)

In other words, writers shape texts to the expectations of expert readers—as Walter Ong famously observed, creating their audiences as they go. The more writers understand this central principle, we might imagine, the more likely they are to carry it across their courses as they adapt genre to audience. Significant, therefore, is the concept of transfer across disciplines, a topic of central importance to Writing Studies (Beaufort, College Writing, College Writing; Bergmann and Zepernick; Donahue and Foster-Johnson; Frazier; Jarratt et al.; Nelms and Dively; Yancey, Robertson, and Taczak).

In sum, study of expert readers can reveal useful information for writers if they are asked about how they read certain kinds of texts and how they respond to them. Internationally-based studies of expert readers (Hillesund; Lamont; Ware and Monkman) as well as those based in the United States (Horning; Sword) show that writers, readers, and editors of journal articles and academic research proposals have specific strategies for reading academic writing. These expectations are captured by a theory of expert meta-reading (Horning, 2012). The theory proposes that meta-readers have three kinds of awareness (meta-contextual, meta-linguistic, and meta-textual). In addition to the three awarenesses, experts have four skills that distinguish them from novices (analysis, synthesis, evaluation, and application). These characteristics are captured by the notion that expert readers are meta-readers who draw on their tacit knowledge and experience before, during, and after reading texts for meaning and use.

The Nature of Theory: Cognitive Modeling for Reading

In order to fully understand reading processes, this top-down, deductive research approach holds that it is essential to begin with broad reasoning. Such reading models allow researchers to identify predictor (independent) variables that, when modeled correctly, result in outcome (dependent) variables leading to reading comprehension. (Synonymous with traits, variables are those elements associated with defined constructs.) As the present study demonstrates, the field of Writing Studies is in need of variable models that embrace both reading and writing in ELA.

The origin for contemporary reading models is generally identified with research by the Rand Reading Study Group in their 2002 report Reading for Understanding: Toward an R&D Program in Reading Comprehension. Considering their work as heuristic, the Rand authors defined reading comprehension as “the process of simultaneously extracting and constructing meaning through interaction and involvement with written language” (xii). The model contains three elements—the reader, the text, and the purpose for reading; each may be understood as rhetorical strategies that inform rhetorical awareness and therefore ELA proficiency. In experimenting with interactions of these three variables as they function in sociocultural contexts, the Rand authors imagined they could identify reading strategies that would help students to become proficient readers: those capable of acquiring new knowledge, understanding new concepts, applying textual information appropriately, demonstrating engagement in the reading process, and reflecting on the text being read.

In 2009, this program of research was taken up by researchers at the Educational Testing Service. Tenaha O’Reilly and Kathleen M. Sheehan developed a rationale and proposed a research base for a cognitively-based reading competency model. Working with a three-stage model of prerequisite reading skill, model building skill, and applied comprehension, O’Reilly and Sheehan proposed seven cognitive principles that, taken together, would produce the proficient readers identified by the Rand researchers: realistic reading purpose; integration and synthesis of information from multiple related texts; query and answer complex questions; extract discourse structure; measure component skills; measure fundamental skills; and read in digital environments.

Integration

Emphasis on meta-awareness is a way to integrate empirical and theoretical research approaches conceptually, to conceptualize findings from both inductive and theoretical research, and to integrate metacognitive and cognitive modeling. Taking up the need for integrated models, Educational Testing Service researchers Randy E. Bennett, Paul Deane, and Peter W. van Rijn turn to the literature of expertise to expand the concept of ELA. As they write, “The literature on the growth of expertise suggests that conceptual development can play a key role in skills development through an intervening variable—metacognitive awareness” (85). Because metacognitive awareness provides students with the ability to scaffold skills in reading as well as writing, this unification through metacognitive awareness allows a new way forward. As Bennett, Deane, and van Rijn propose, it is useful to target key practices, defined as “integrated bundles of reading, writing, and thinking skills that are required to participate in specific, meaningful modes of interaction with other members of a literate community” (86). This integrated model ushers in a new era for ELA.

Recent theoretical and empirical scholarship related to meta-reading and cognitive modeling informs our generative research of expert writing studies readers in four precise ways. First, as to design, we are not bound either in our research design or reporting structure by taxonomies that fail to capture how language actually works in situated contexts. As we demonstrate, we are open to interrelationships among reading, writing, speaking, and listening variables and do not seek to use restrictive domain models for the purpose of interpretive convenience. Second, as to method, our emphasis on stated research questions, purposive sampling of experts, and multi-method data collection afforded a variety of ways to capture the complex phenomenon of language arts we were examining. Third, as to our findings, we were able to identify distinct patterns of meta-contextual awareness and meta-evaluative awareness from the meta-reading model, as well as process and audience from the cognitive model—patterns that helped us establish a study-based emerging reading model for English Language Arts. This model may then be examined and refined by other researchers interested in theoretical and empirical scholarship. Fourth, the study contributions allow considerations for interrelated research implications and pedagogical heuristics that, along with the model, may be used for further study.

We now turn to our study.

Method: Research Questions, Study Sample, and Data Collection

Recent attention to massive data analysis in Writing Studies holds the potential to disenfranchise researchers interested in small sample sizes best examined by descriptive statistics and qualitative analysis (Moxley, et al.). To carve out a place for such research in an era of big data, we turn to our methods in some detail in terms of our research questions, our emphasis on generative design, our sampling plan design, and our data collection techniques.

Research Questions

Based on our literature review, we developed five research questions:

- Could a survey of reading aims be developed that provides valid information about cognitive variables and their importance to our expert reviewers?

- Then, using a defined meta-cognitive model and a defined cognitive model, could we reliably identify the presence of variables in interviews with expert reviewers?

- Under analysis, what do these variables reveal about prevalent meta-cognitive and cognitive variables?

- Based on this analysis, could we then offer a variable model of reading useful to those using a language arts orientation to writing studies?

- Finally, drawn from the model, could we offer a preliminary list of research implications and pedagogical heuristics, which would be useful to instructors?

As part of our program of research, these questions flow directly from the literature review and the need for integration of competing research traditions. As noted, emphasis on meta-cognition provides an especially promising gloss that allows us to learn about cognitive processes. Taken in terms of each other, meta-cognitive and cognitive processes foil the binary that too often separates reading and writing research and practice. Answers to these questions are used to structure the results of our analysis.

Study Sample

Our sampling plan is classified as a non-probability, purposive sample. Such samples are ideal for labor-intensive, in-depth studies involving only a few cases. As a purposive sample, we established inclusion criteria for our key informants and identified participants. Such a design is ideal for pilot studies such as ours that require intensive analysis of information from difficult-to-find populations (Bernard 162-178).

All six expert readers in our study were members of the editorial board of a journal in Writing Studies. After informed consent, participants completed an online survey about their reading behavior (and some demographic information) and then responded to questions in a semi-structured recorded telephone interview with Alice Horning. Participants were selected from among an editorial board of twenty-two members to represent different ages, genders, racial and ethnic categories, and types of institutions at which they work. The study was reviewed and approved by IRBs at two of the lead authors’ institutions.

All participants identified with rhetoric and composition as their field of study; all reviewed for other journals in their field, some reading for as many as ten other journals and others for as few as four per year, and all but two served on the editorial boards of other journals in their field. In their editorial board roles, the experts reviewed in the following areas of writing studies: assessment; curriculum; history; outreach; professional advancement; program design; relationships between writing programs and the public they serve; roles of technology in instruction; theory; and writing across the curriculum, writing in the disciplines, and electronic communication across the curriculum initiatives. Thus, in terms of inclusion criteria, our respondents qualify as experts on the meta-criteria identified by Harry Collins and Robert Evans for a sampling plan involving experts: all have appropriate credentials, demonstrated experience, and a proven track record of accomplishments.

Data Collection

To draw on these disparate ideas about the expert reading of complex texts, we turn to two methods, both involving models. The first, a meta-reading model, is based on scholarship by Alice Horning reported in Reading, Writing and Digitizing: Understanding Literacy in the Electronic Age. This model is designed to capture the ability to reflect on one’s reading processes and make observations about those processes. The second model, a cognitive strategy model, is based on research by O’Reilly and Sheehan. The elements of both initial models are shown in Table 2. These definitions allow the constructs in both models to be targeted for examination using surveys and coded qualitative data from our expert academic readers.

Once participants had signed the informed consent document, they were sent a link to the online survey. The questions from these surveys are shown below in Table 1. The survey questions were designed to see if these readers made use of the twelve targeted variables common to both the meta-reading and cognitive model.

Once the survey was completed, Horning arranged a 30-minute semi-structured telephone interview with each participant. The nine questions in the phone interview, provided below, were designed to prompt our experts to comment on both the meta-reading model and the cognitive model.

- Everyone in the study responded to the online survey by saying that journal audience is an important consideration. Can you describe your perception of the most common reader and the strategies you use to determine if this reader is to be served by the articles at hand that you are reviewing?

- On the survey, we asked about whether and to what extent you consider the author’s purpose. Can you tell me how you think about this matter as you read an article?

- How important is the context of the topic in the field?

- What is your first step in completing reviews of articles for the journal?

- What steps do you follow as you read the article?

- Do you read holistically?

- How do you decide your judgment of accept, revise, reject?

- Describe the role of your background knowledge of the discipline and topic of the article.

- Do you have any further thoughts you would like us to know about your role as a reviewer of manuscripts for the journal?

To ensure that the reflective questions were based on actual manuscripts—that is, so that the interviews were discourse based in order to explore tacit knowledge of our experts (Odell, Goswami, and Herrington)—participants received articles they had reviewed previously and their reviews of those papers.{1} Telephone interviews were recorded when possible using Elluminate software. Following the interviews, a student created transcripts of each. (In two cases, the Elluminate system did not function correctly or at all, so detailed notes were used to create a transcript and sent to the participant for review and correction as needed.) Each transcript was then broken into individual sentence units and put into an Excel spreadsheet.

Following transcription of the telephone interviews, key phrases were identified as nodes—distinct words or phrases that captured concepts revealing the reading patterns of those interviewed. These category names, as Anselm Strauss and Juliet Corbin have defined them, arise from the pool of concepts associated with the construct of reading as presented in the two models. As Tehmina Basit has noted, coding at the nodes provides a way to organize and analyze qualitative information. Procedurally, two of the authors coded the data at the nodes for the variables in both the meta-reading and cognitive models. Because reliability is a prerequisite to validity in the interpretative model used in this study, only those comments on which the judges agreed in the coding were examined for inferential analysis. In other words, to validate the expertise model shown in Figure 1, we began with an analysis of reliability.
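The agreement rule described above can be illustrated with a minimal sketch. It assumes that each sentence unit received exactly one code from each of two coders; the code labels and the five sample units below are hypothetical and are not drawn from the study's transcripts. The sketch keeps only the units on which both coders assigned the same code and reports simple percent agreement, which may differ from whatever reliability statistic the authors computed.

```python
from collections import Counter

# Hypothetical codes assigned by two coders to the same five sentence units.
# These labels are illustrative only; they are not the study's data.
coder_a = ["audience", "process", "purpose", "process", "topic"]
coder_b = ["audience", "process", "topic", "process", "topic"]


def matching_codes(a, b):
    """Return (unit_index, code) pairs where both coders chose the same code."""
    if len(a) != len(b):
        raise ValueError("both coders must code the same sentence units")
    return [(i, x) for i, (x, y) in enumerate(zip(a, b)) if x == y]


# Only matched units would go forward to inferential analysis.
matches = matching_codes(coder_a, coder_b)

# Simple percent agreement (not corrected for chance agreement).
percent_agreement = 100 * len(matches) / len(coder_a)

# Frequency of each code among the matched units.
frequencies = Counter(code for _, code in matches)

print(matches)
print(percent_agreement)
print(frequencies)
```

Percent agreement is the simplest possible summary; a chance-corrected statistic such as Cohen's kappa is a common alternative when reporting reliability for two coders.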

Results

Table 1 shows the online survey items and distributions of Likert scale responses of the experts in terms of the cognitive model.

Table 1. Survey responses (n = 6)

Responses are reported as count (percent).

| Cognitive Variable | Question | VSA | SA | A | D | SD | VSD |
|---|---|---|---|---|---|---|---|
| Audience | When I start to read an article for the journal, I consider the journal audience. | 4 (67%) | 2 (33%) | — | — | — | — |
| Purpose | When I start to read an article for the journal, I consider the author’s purpose. | 1 (17%) | 1 (17%) | 4 (67%) | — | — | — |
| Topic | When I start to read an article for the journal, I consider the context of the topic in the field. | 3 (50%) | 2 (33%) | 1 (17%) | — | — | — |
| Length | I look at the length of the article before I read it. | 1 (17%) | 1 (17%) | — | 1 (17%) | 2 (33%) | 1 (17%) |
| Structure | I pay careful attention to the structure of the piece. | 4 (67%) | — | 2 (33%) | — | — | — |
| Style | I consider whether the article is suited to the readership of the journal. | 4 (67%) | 2 (33%) | — | — | — | — |
| Process | N/A | — | — | — | — | — | — |
| Scholarly Integration | The literature review is very important. | — | 5 (83%) | 1 (17%) | — | — | — |
| Methodological Selection | I evaluate the appropriateness of the method for the question under study. | 1 (17%) | 3 (50%) | 2 (33%) | — | — | — |
| Validated Claims | I compare the claims made to the data provided. | 3 (50%) | 2 (33%) | 1 (17%) | — | — | — |
| Topic Specific Vocabulary | I evaluate the language/word usage of the article. | — | 2 (33%) | 3 (50%) | 1 (17%) | — | — |
| Time | I spend at least an hour or more on a review, on average. | 4 (67%) | 2 (33%) | — | — | — | — |

Regarding the variables of audience, purpose, topic, structure, style, scholarly integration, methodological selection, validated claims, and time, the participants very strongly agreed (VSA), strongly agreed (SA), or agreed (A) that these variables were among their aims when engaged in peer review. Regarding the variable of topic specific vocabulary, the range of scores broadened to include one expert who disagreed (D) that this was an aim of reading. The largest dispersion was found in the variable of length, in which scores ranged from very strongly agree (VSA) to very strongly disagree (VSD). Because the variable of reading process was too complex to be captured in a single question, the survey did not request information about that variable; rather, participants’ reading process was covered in the phone interviews.

In the coding summarized in Table 2, 529 nodes were identified, ranging from 77 nodes coded in one expert’s transcript to 128 nodes in another.{2}

Table 2. Descriptive Statistics for Matching Codes (n = 529)

| Variable | Frequency | Percent |
|---|---|---|
| Model 1: Meta-reading model | | |
| Meta-contextual: Conversational setting of research | 62 | 11.7 |
| Meta-evaluative: Judgment of the value of research | 25 | 4.7 |
| Meta-linguistic: Writerly conventions of research | 12 | 2.3 |
| Meta-textual: Identification of text structure | 11 | 2.1 |
| Meta-analytic: Exposition of research | 10 | 2.5 |
| Meta-synthesis: Integration of present research into field | No match | N/A |
| Meta-application: Envision use of research | No match | N/A |
| Total Coded | 120 | 23 |
| Could Not Code | 409 | 77 |
| Model 2: Cognitive Model | | |
| Process: Explanation of protocols | 132 | 25 |
| Audience: Identification of readers of research | 61 | 11.5 |
| Scholarly integration: Integration of literature review into research | 33 | 6.2 |
| Purpose: Aim of research | 16 | 3 |
| Topic: Category of research | 8 | 1.5 |
| Style: Knowledge of conventions | 7 | 1.3 |
| Structure: Cohesion of research | 5 | .9 |
| Time: Reader commitment of review of research | 5 | .9 |
| Methodological selection: Choice of protocols for research | 3 | .6 |
| Topic specific vocabulary: Use of specialist knowledge in research | 1 | .2 |
| Length: Exposition of research | No match | N/A |
| Validated claims: Chain of causal logic in research | No match | N/A |
| Total Coded | 271 | 51.2 |
| Could Not Code | 258 | 48.8 |

Of the 529 nodes recorded, the judges could not reach agreement on 409 (77%) of those associated with the meta-reading model, while 120 (23%) could be coded. For the cognitive model, the judges could not reach agreement on 258 (49%) of the 529 nodes, while 271 (51%) could be coded. We then examined the reliability of the coding using a Kappa statistic for those nodes that could be identified and therefore coded (Carletta). For the meta-reading model, direct agreement between the judges was .14 (p < .001). For the cognitive model, direct agreement between the judges was .63 (p < .001). While both values are statistically significant, we interpret the low agreement for the meta-reading model as an indicator of the difficulty of identifying, in a transcript, tacit knowledge related to the ability to reflect on one’s own learning. Conversely, the moderate agreement for the cognitive model is an indicator of the more straightforward mental processes accompanying expertise.
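For readers less familiar with the Kappa statistic, the following sketch shows how Cohen's kappa corrects the raw agreement between two judges for the agreement expected by chance. The function name `cohens_kappa` and the toy labels are assumptions made for illustration; they are not the study's data, and the exact computation used in the study may differ.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two judges who each labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both judges must label the same non-empty set of items.")
    n = len(labels_a)

    # Observed agreement: share of items given the same label by both judges.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: for each label, the product of the two judges'
    # marginal proportions, summed over labels.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        # Degenerate case: both judges used one identical label throughout.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels for eight nodes (illustrative only).
judge_a = ["process", "process", "audience", "audience",
           "purpose", "process", "audience", "process"]
judge_b = ["process", "audience", "audience", "audience",
           "purpose", "process", "process", "process"]

kappa = cohens_kappa(judge_a, judge_b)
print(round(kappa, 2))  # prints 0.58
```

Here the judges agree on six of eight nodes (.75), chance agreement is about .41, and kappa is therefore about .58. Values near 0 indicate agreement no better than chance and values near 1 indicate near-perfect agreement, which is the scale on which the .14 and .63 reported above are read.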

Table 2 also shows the breakdown of the comments from the telephone interviews by model. These are the comments that were used for the analysis. For the meta-reading model, synthesis and application were withdrawn because they could not be identified in the data; for the cognitive model, length and validated claims were withdrawn for the same reason. In the meta-reading model, codes ranged from the most frequent, the meta-contextual variable (11.7%), to the least frequent, the meta-analytic variable (2.5%). In the cognitive model, codes ranged from the most frequent, process (25%), to the least frequent, topic-specific vocabulary (.2%).
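The percentages reported for the cognitive model in Table 2 follow directly from the frequencies and the 529-node total. The arithmetic can be sketched as follows, using the Table 2 frequencies; the dictionary and function names are illustrative assumptions.

```python
TOTAL_NODES = 529  # all nodes identified in the transcripts

# Matching-code frequencies for the cognitive model, taken from Table 2.
cognitive_frequencies = {
    "Process": 132,
    "Audience": 61,
    "Scholarly integration": 33,
    "Purpose": 16,
    "Topic": 8,
    "Style": 7,
    "Structure": 5,
    "Time": 5,
    "Methodological selection": 3,
    "Topic specific vocabulary": 1,
}


def percent_of_total(count, total=TOTAL_NODES):
    """Express a frequency as a percentage of all nodes, to one decimal place."""
    return round(100 * count / total, 1)


percentages = {
    name: percent_of_total(count)
    for name, count in cognitive_frequencies.items()
}

total_coded = sum(cognitive_frequencies.values())
could_not_code = TOTAL_NODES - total_coded
```

Summing the frequencies gives 271 coded nodes (51.2%) and leaves 258 uncoded (48.8%), matching the totals in Table 2.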

A closer review of the data revealed distinct patterns on four specific variables: meta-contextual awareness and meta-evaluative awareness from the meta-reading model, and process and audience from the cognitive model. In each model, these are the two variables with the most matching codes in Table 2.

Meta-contextual awareness. The experts’ comments notably reveal their sense of meta-contextual awareness. Two readers illustrate this especially well. One worked for a number of years in a community college setting and considers herself an outsider because of this experience; as such, she believes she has the distance necessary to reflect on real-world applications of the research she is evaluating. The other works in a scholarly area different from the focus of the journal, though her position entails directing a writing program, so she has practical experience that allows her to evaluate articles appropriately. She says this about her reading: “It is easier to see that the emperor has no clothes for me because I have the distance of reading from outside.” Reading from the borderlands perspective has, for this reader, value.

In terms of context, the majority of our experts offered comments that reflect their meta-contextual awareness of the field of writing studies. As they read, they reported that they consider carefully the way an article positions itself within the field, the other work that is cited, and the writer’s knowledge of the field. As reflected in this comment, these matters definitely influence experts’ judgments: “I look to see what sources are there and then look to see if the writer has proven that these new sources are appropriate and useful.” Reader persona, awareness of research trends, and integration of sources are each meta-contextual variables of the expert reading process.

Meta-evaluative awareness. Defined as the way that readers render judgments based on issues of authority, accuracy, currency, relevancy, appropriateness, and bias, meta-evaluative awareness is at the core of decisions. As we know from the decision science research of Amos Tversky and Daniel Kahneman, the judgmental process is complex and often rests more on heuristic reasoning than on elementary claims. The program of heuristics and biases research they pursued during their careers is too complex to discuss here, except for its core concept of dual reasoning. Explaining research for which he was awarded the Nobel Prize in Economic Sciences, Kahneman (98) observes that heuristics are a consequence of intuition (termed System 1 thinking) and strategy (the corrective System 2).

Such dual systems of thought are evident in the observations of our experts. As one respondent noted, “It wasn’t that the writer was on the complete wrong track, it was just I had enough objections as someone who also understands the literature on what they were talking about, that I felt like this really needed to be dealt with.” Here we see evidence of both intuition (the researcher seems to have presented an accurate account of the literature) and strategy (yet some bias is evident in interpretation and must be established in the review). Throughout the expert comments, we find both intuition (“Because I think even as I’m reading it and I sort of have this reaction to it, that I feel like, ‘okay, this is a piece that is really ready to go’ or ‘this is a piece that is so not ready to go’”) and strategy (“If it appears weak, I read with more attention and care because I think the writer will need more advice and information than does the writer of a stronger piece”). Careful attention to the comments of our experts reveals the complexity of the evaluative process—and offers a window on the interrelated processes of heuristic and strategy involved in passing summative judgment on a manuscript.

Process. Experts responded to our interview questions with discussion of or allusions to their own cognitive protocols as they engaged with an article for review purposes. In general, process comments make clear how readers work through the article and the strategies they use to assess whether the article is suitable for the journal.

The context of the reviewing task for the target journal is important here. In the target journal for the present study, expert readers are asked in the review process to make publication recommendations, offer confidential comments to the editors that are not shared with the writer, and provide a written response to the author(s) in which the publication recommendation is justified. Because all of the experts are writing teachers, their responses were generally at least a page or two of fairly detailed feedback to the author. In addition, the editorial board met regularly at two national meetings for a discussion of an article that all members had read (with author permission), and to consider other issues relevant to the running of the journal.

Hence, the review process is quite defined. It is therefore interesting that the experts all follow similar processes: multiple readings of an article; note-taking of some kind; and the composition of a written response, with or without separate comments to the editor. Replicating the findings of Tardy and Matsuda, we found that at least one expert does try to figure out who the author might be, but this speculation is not a key factor in their judgment of a piece. Most read optimistically, trusting that the editors only send out articles that have some merit. A comment that supports this kind of reading, even if the piece is not acceptable, is this: “I’ll say, you know, ‘This little piece is really promising and you should build a new essay off of this.’” Most readers report thinking reflectively about their judgments, finding both the response form provided by the editors and the meetings at national conferences helpful to the review process. This uniformity of process may, however, be related to the defined reviewing task; as such, it is worth considering the impact of task on process.

Audience. As noted in Table 2, audience is defined for the purposes of our analysis as the likely readers of the journal for which the experts are reviewing. As one reviewer noted, “I try to think of who might be in the room at our conference listening to this paper”; this reviewer then reads from that frame, with those participants as the audience.

These readers, however, constructed audience in a variety of ways. Some constructed the audience as individuals known to the reader, some as members of the organization, and some as themselves as either typical or atypical readers. The community college writing program administrator (WPA) reader and one other considered themselves atypical, but noted that they then tried to read from a broader perspective; these reviewers described their own positions in relation to what they view as the WPA audience—one, for example, seeing herself as “outside the core of the discipline” which she views as an advantage because it lets her be “more objective.” Whether outside the discipline or holding the view that, because of expertise, the reviewer can be the typical reader, there is much that speaks to the nature of the reader’s mental construction of audience. The transcripts suggest that “real-world” experience of the audience is critical for the reviewers to be able to take the role of the audience for the purpose of evaluation; that is, the audience is “mired” (as one said) in embodied experience.

Qualification of variable model analysis. On the basis of the inter-coder agreement shown in Table 2, we have provided the above analysis of four variables. However, it is important to note that each of the variables shown—with the exception of those that received no matching code from our two readers—was present in the comments of our experts. As such, it should not be inferred that matching codes are evidence of hierarchy.

For example, the cognitive variable of purpose was reliably identified only 16 times in the coding. Interpretatively, however, this variable is very important in our understanding of expert readers. In broad terms, experience tells us that authors in the field of Writing Studies explain the origin of their work, categorize the research design, and address pedagogical implications of their results. Within this general framework, the coded data shows clearly that our expert reviewers think an explicit statement of purpose is needed. One reviewer noted specifically that he would stop reading without a clear sense of the purpose of the article, and would think carefully about what to say in his review about this issue as a problem. Another told us that if the stated purpose of the article was not aligned to the journal readership, he would reject it on that basis alone. As is the case with audience, stated purpose matters to reviewers; alignment of purpose to journal further influences the reading process. There are fewer matching codes for purpose, but the variable is no less relevant than the others in the model.

In future research, corpus linguistics may allow researchers to extend and improve the hand coding used in the present study. As Doug Irving has written, new analytic platforms such as RAND-Lex are capable of lexical analysis (presence of key terms), sentiment analysis (attitudes toward those key terms), topic modeling (thematic analysis of terms), and automated classification (based on human supervised coding of the kind we have done in this study). Hence, each of the meta-cognitive and cognitive variables presented in Figure 1 should be taken as an important finding of this present study and a preliminary comparative target for future studies.

Implications for Research

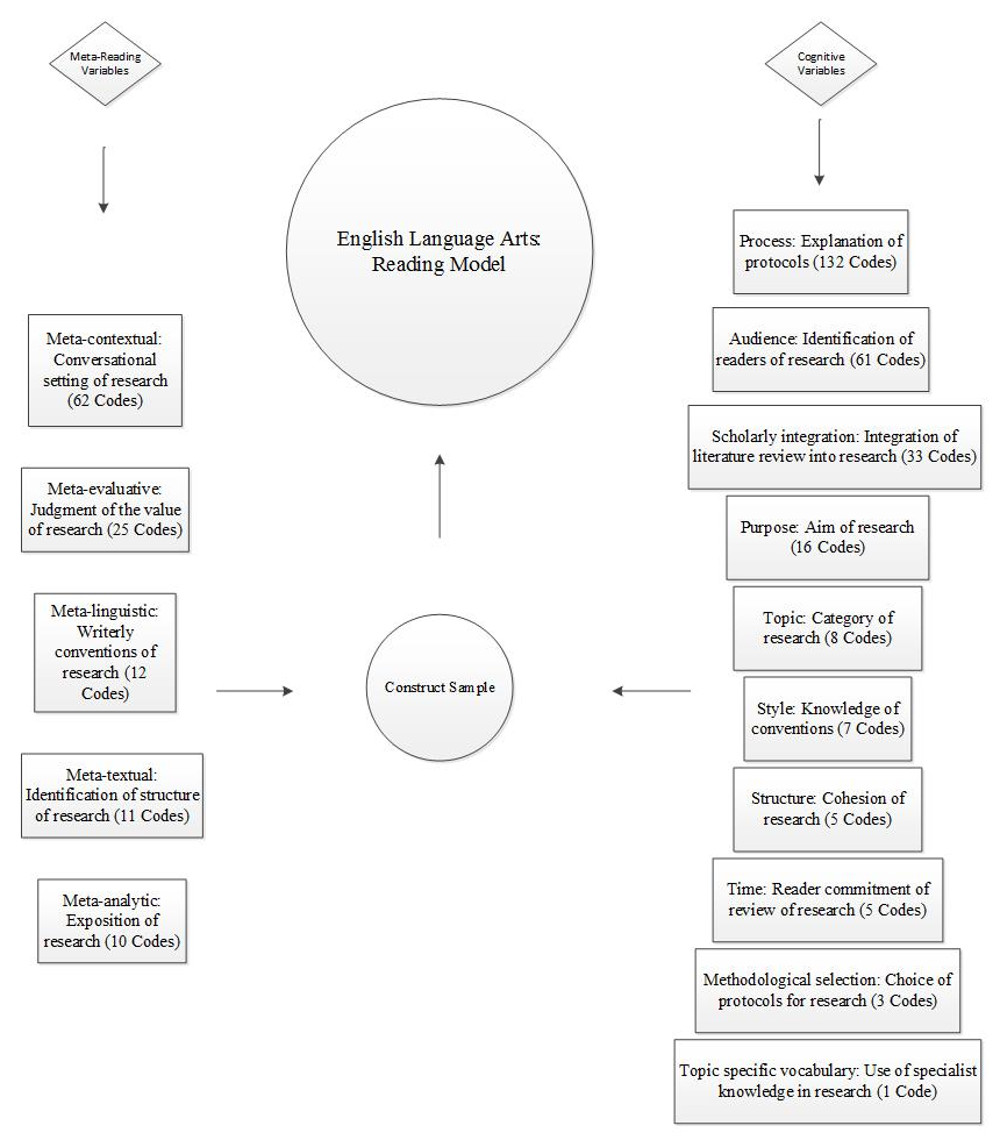

We began our study with an investigation of two models: one capturing the variables of meta-reading awareness (the ability to reflect on one’s reading processes and make observations about those processes); and the other capturing cognitive strategies (mental processes accompanied by reading behaviors). Based on these models, we discarded variables from both models that were not able to be coded reliably. Our proposed expertise model, shown in Figure 1, blends the two models with which we began.

Figure 1. Emerging Reading Model for English Language Arts

Essentially a taxonomy, Figure 1 presents a principled ELA expertise model that can be used heuristically to build theory and identify practices associated with a broadened view of writing. On the left of the figure we identify variables of the meta-reading model that may be thought of as targets of theory and instruction: meta-contextual (conversational setting); meta-evaluative (value judgment); meta-linguistic (writerly conventions); meta-textual (structural identification); and meta-analytic (exposition). Together, these five variables are aimed at fostering the ability of writers to reflect on their reading processes and articulate observations about those processes. Admittedly, these meta-cognitive processes may be as challenging to teach as they are to observe; as such, these processes will require their own innovative pedagogies designed to improve student reflection. On the right we identify the variables of the cognitive model that we also offer as theoretical and instructional targets: process (protocols); audience identification (readers); scholarly integration (literature review); purpose (aim); topic (category); style (conventions); structure (cohesion); time (commitment); methodological selection (protocol choice); and topic-specific vocabulary (specialist knowledge). The ten variables are aimed at the knowledge, processes, skills, and efficacy that our experts identify as part of their reading processes. These cognitive processes are many and complex and, as is the case with the meta-cognitive variables, will require new integrative pedagogies associated with introducing language arts models to students in our classrooms.

We have drawn the figure to emphasize that our findings are relevant in terms of the construct sample drawn from this study of experts and will require further empirical studies to become part of a larger program of research needed to build a comprehensive ELA model grounded in expertise. We believe that such models are needed. As Arthur N. Applebee has noted in terms of the strengths of ELA models, they present (1) a strong vision of college and career readiness standards, (2) a central, elevated location for writing within an integrated view of the language arts, (3) an informed view of progress in reading comprehension that extends beyond writing as a skill, and (4) shared responsibility for language instruction across the disciplines. Alert to the affordances of an ELA model, we now turn to a summary of our five research findings.

Finding 1: ELA research must begin with an integrated construct model sufficiently rich to capture what actually occurs in situated contexts. As decades of empirical research have illustrated, there is no evidence to support an impoverished view of reading and writing as mere skills-based activities. Nor is there reason to accept the divisive partition of reading and writing because skills-based tests demonstrate increased evidence of reliability—even as they diminish construct representation and related validity measures. As Meghan A. Sweeney and Maureen McBride argue in their study of reading and writing connections as experienced by basic writers—a study, incidentally, that uses a design similar to ours—there is no reason to accept skills-based models when evidence such as that provided by the Institute of Education Sciences reveals that meta-strategies and cognitive processes exist in both reading and writing. As Steve Graham and his colleagues recommend in their evidence-based guide to student instruction, writing and reading skills are best learned when integrated. Indeed, this integration, the report authors conclude, helps students learn about important text features common to both reading and writing. Important to the adoption of an ELA framework is the unification of reading and writing under a shared framework of cognitive process and knowledge, including meta-knowledge, domain knowledge, text features, and procedural knowledge.

The need for a robust construct model—one sufficiently rich to capture what actually occurs in situated contexts—complements our observation that this study was conducted to advance opportunities for student learning through empirical research. More than a slogan, connections between robust construct modeling and student rights have now been advanced in a children’s rights model of literacy assessment. In its emphasis on “prospects for deep self-assessment,” we see that the intrapersonal domain is becoming recognized as central to instruction and assessment (Crumpler 123-124). None of what has been reported here would have been possible without a construct model designed to reflect the complexities of language. Figure 1 provides very specific variables to investigate, and this specificity lends itself to exact investigative targets—targets that are both research aims and opportunities to advance student learning.

Finding 2: An integrated construct model reveals readers in the process of constructing meaning through inquiry. As the Oxford English Dictionary reminds us, when meta is prefixed to the name of a subject or discipline, the new term then is used to “denote another which deals with ulterior issues in the same field, or which raises questions about the nature of the original discipline and its methods, procedures, and assumptions” (“meta”). As the present study demonstrates, the proposed meta-reading model, with its variables confirmed in Table 2, reveals readers in the process of constructing meaning through inquiry. We believe that the term “meta-reading”—the ability to reflect on one’s reading processes and make observations about those processes—is preferable to the broader term “meta-cognitive” in identifying the precise reflective skills, shown on the left of Figure 1, that are related to reading achievement. Once the meta-reading framework is adopted, we can understand more fully how, for instance, evaluative processes work. While it was not an aim of our study, the transcripts certainly seem amenable to heuristic research associated with Tversky and Kahneman. Imagine the contributions that could be made if we were to learn more about how experts make judgments on text in terms of the dual functions of intuition and strategy.

Finding 3: An integrated model reveals that learning to read involves complex cognitive and meta-cognitive processes. Readers draw on the cognitive abilities—mental processes accompanied by reading behaviors—shown on the right of Figure 1. As our generative research demonstrates, while cognitive abilities are easier to identify—perhaps because they are associated with observable behaviors that are readily explainable—the meta-reading processes, tacit and unobservable as they may be, are important. As Table 2 illustrates, our study of expert readers confirms that readers must both cognitively process text and reflect on the methods, procedures, and assumptions those texts contain.

While beyond the scope of the present study, emphasis on the cognitive domain has been important to writing studies in seminal works originating with Flower’s The Construction of Negotiated Meaning: A Social Cognitive Theory of Writing. In terms of present language arts research, the cognitive domain theory advanced by Bennett, Deane, and van Rijn is extremely useful in adding depth to the cognitive variables identified in Figure 1. Such depth is not to be underestimated. As Bennett, Deane, and van Rijn have noted regarding ELA, the constructs have a “social character” in which “reading and writing support specific literacy practices, such as textual analysis, argumentation, research, and control of the writing process” (83). As they further observe, “The literature on the growth of expertise suggests that conceptual development can play a key role in skills development through an intervening variable—metacognitive awareness” (85). This enriched understanding of the relationship between meta-cognitive and cognitive modeling can inform our understanding, in very specific ways, of the literacy practices we want our students to experience and demonstrate. Pedagogical concerns such as task construction, learning progression sequence, assessment, and validity claims can each be informed by cognitive modeling.

Finding 4: An integrated construct model reveals that, as is the case in learning to write, learning to read involves sociocultural processes. As James Paul Gee has observed, a sociocultural approach to learning places a premium on experiential processes, participation, mediating technologies, and communities of practice. Said one of our expert readers: “I mean I feel like I’m—I feel like when I sit down with—this is going to sound kind of cheesy now but I mean it: But when I sit down with one of these things I feel like I’m supposed to be reading as a representative of the community, so I feel like I have that voice in the back of my head.” Here we see evidence of meta-reading in terms of meta-contextual awareness—and evidence of cognitive behavior in terms of awareness of processes. The interaction of these two variables is most fully understood in terms of insights gained with attention to communities of practice. Recalling again Hyland and Jiang’s observation that writers’ stance is related both to their own responses to their topic and claims and to the degree of objectivity they impose, we see that a sociocultural perspective helps us understand how a writer’s stance is constructed within the text.

Finding 5: An integrated construct model reveals that, as is the case in writing studies research, reading studies research benefits from a programmatic approach. Attention to variable modeling provides the basis for programs of research. It is especially important to note, for instance, the parallels between the cognitive variables we identify in our study and the clues used for constructing author identity in Tardy and Matsuda. With the exception of audience, our list roughly corresponds to their categories of breadth of knowledge, choice of topic, use of genre conventions, use of sentence structure, and patterns in the citations of sources. Tardy and Matsuda conclude—and we agree—that their study has implications for readers and writers. “Our study suggests,” they conclude, “that such construction is shaped by the rhetorical task (evaluation), the subject position of the reader (reviewer, gatekeeper), and the reader’s own values (disciplinary, epistemological, style, etc.)” (47). To their findings we add that attention to research frameworks using metacognitive and cognitive domains may yield pedagogies supporting the socio-cognitive nature of reading as a deeply situated rhetorical act.

Implications for Instruction

As we noted above, researchers in the field of writing studies, in broad terms, explain the origin of their work, categorize the research design, and address pedagogical implications of their results. Our research follows this tradition. Faithful to the praxis that characterizes our field, we conclude with instructional implications.

Lest we forget, this study is ultimately an investigation of the variables of peer review. In terms of the advancement of student learning, research on peer review has expanded enormously. Adam Loretto, Sara DeMartino, and Amanda Godley present a thorough review of the role of peer review in K-12 writing instruction in their study of social positioning. In post-secondary writing, Joseph Moxley and David Eubanks provide a comprehensive review of the history of peer review in their study of rubric-based scores that students award to each other’s papers. Launched in 2011, the digital platform MyReviewers was created to facilitate student learning while studying the cognitive, interpersonal, and intrapersonal domains associated with peer review and writing improvement. If we focus on peer review—how students can become more discerning readers of each other’s texts—we can better understand the implications of a program of research involving lessons learned from expert readers in order to strengthen ELA practices in classroom settings. Meta-activities, for example, might cultivate a rhetorical awareness of audience, with a focus on activities that examine how textual cues invoke audience. And activities involving cognitive modeling might ask students for textual examples that lead to claims and qualifications, thus emphasizing that meaning rests within word choice.

Under the ELA model presented in Figure 1, it may be worth designing curricula, and peer-review rubrics reflecting those curricula, based on the variables of our study shown in Table 3.

Table 3. A Meta-Cognitive Language Arts Model Based on Expert Readers

| Variable and Definition | Key Curricular Concept | Sample Rubric Question |
|---|---|---|
| Model 1: Meta-Reading Model | | |
| Meta-contextual: Conversational setting of research | Syntactic combinations—how word and phrase structures are used to signal the context of the work of others and knowledge of readers and their experiences | Identify how the writer identifies and limits scope and demonstrates reader and subject knowledge. |
| Meta-evaluative: Judgment of the value of research | Evaluation—how reasoned judgment is conveyed | How does the writer convey to the reader that a positive evaluation should be made of the work at hand? |
| Meta-linguistic: Writerly conventions of research | Semiosis—how language is used to shape ideas | Create a keyword list of critical concepts used in the paper and demonstrate how the writer defines and expands meaning. |
| Meta-textual: Identification of structure of research | Context—how the writer creates boundaries within the text to limit analysis | How does the writer signal the organization of ideas through word choice and graphic techniques? |
| Meta-analytic: Exposition of key concepts in research | Analytic structures—how analytic techniques are created and maintained | Draw the trajectory of the paper and demonstrate how claims are supported and qualified. |
| Model 2: Cognitive Model | | |
| Process: Explanation of protocols | Generative structures—how cues are given to signal organizational patterns | Identify key words that are used to explain how the research was conducted and the limits and benefits of the identified method. |
| Audience: Identification of readers of research | Genre conventions—how the writer structures roles for readers (audience) to play in the text | Based on the strategy of the writer, identify how personas are created for the reader at the level of word choice and tell how well these personas are served by that vocabulary. |
| Scholarly Integration: Literature review into the research | Integration—how the writer extends traditional citation practices to shape ideas | Identify boosters, hedges, and arguing expressions surrounding citations and demonstrate how they are used to create inclusiveness. |
| Purpose: Aim of research | Intention—how the writer signals intention | Identify how the writer demonstrates the aim and how that intention is continued throughout. |
| Topic: Category of research | Disciplinary awareness—how the writer signals research limits | Explain how the writer demonstrates the limits of the analysis and why these limits were drawn. |
| Style: Knowledge of conventions | Style—how conventions of genre are achieved | Select key passages and demonstrate how the writer exhibits familiarity with the genre at hand. |
| Structure: Cohesion of research | Cohesion—how the writer uses lexical and textual devices to create structure | Identify the labeling and textual connective process that the writer uses to demonstrate continuity of thought. |
| Time: Reader commitment of review of research | Time—how the reader allocates time for review and judges that time wasted or beneficial | Report if the time spent in review was well allocated, including statements of your own preparedness as well as that of the writer. |
| Methodological Selection: Choice of protocols for research | Research Methodology—how the reader demonstrates choice of research method | Identify the section in which the writer justifies the research strategy and tells how the research was conducted. |
| Topic Specific Vocabulary: Use of specialized knowledge of research | Contextualized Word Choice—how the writer tailors word choice to topic | Identify vocabulary used to signal to the reader that a specialized vocabulary is needed in the case of the topic at hand. |

Variables associated with meta-reading practices could be used, for example, to help peer reviewers identify meta-contextual strategies the writer uses to establish the setting of research in the context of other work and reader knowledge/experience that is critical to source-based writing. In similar fashion, students could be taught to identify meta-linguistic elements used to focus reader attention on syntactic combinations—word and phrase structures—used to signal cohesion. These approaches to instruction are especially valuable in terms of corpus-based techniques that lend themselves to new pedagogies. These techniques, emphasizing linguistic awareness, are especially significant to matters of transfer, as Laura Aull has forcefully argued in her study of first-year writing and linguistically informed pedagogical applications. Similarly, cognitive variables can be used to help students learn to identify a wide variety of writing, reading, and critical analysis experiences identified by the Framework for Success in Postsecondary Writing (Council of Writing Program Administrators, National Council of Teachers of English, and National Writing Project) and the WPA Outcomes Statement 3.0 (Council of Writing Program Administrators). While instruction associated with these frameworks has demonstrated student gains (Elliot et al.; Kelly-Riley et al.), the emphasis on agency in the sample rubric questions allows students to read for textual patterns. Observations of students using these variables create an occasion to study the intrapersonal and interpersonal domains associated with learning to write under an ELA framework. Table 3 is but an instance of the instructional benefits derived from the study of expert readers in which situational socialization, tacit knowledge organization, and acculturated professionalism may serve as key principles to advance student learning.

The metaphor used to drive this study has allowed us to trace an especially elusive current: the role of expert review in writing studies research. Following that current led to other insights, including evidence for the usefulness of an ELA model combining both reading and writing. As Table 3 illustrates in a very limited way, studies such as ours hold the potential to inform curriculum design; task construction; learning sequence; evidence gathering in terms of validity, reliability, and fairness; and use of information to structure opportunities for students. While bottles such as ours will, we hope, continue to provide information in their meaningful drift, the message is clear: just as there is a need for reader-based writers, there is an equal need for writer-based readers.

Author Note: The research reported here was performed with the following IRB approvals: Horning, Oakland University, IRB 5111; Elliot, New Jersey Institute of Technology, IRB E154-13.

Notes

- Paper authors also gave their consent for the use of their work in the study. The original reviews were blind.

- While the number of participants in the study is small, the number of comments in the sample is large enough to warrant the use of inferential statistics to support inter-reader reliability.

Works Cited

Applebee, Arthur N. Common Core State Standards: The Promise and the Peril in a National Palimpsest. English Journal, vol. 103, no. 1, 2013, pp. 25-33.

Aull, Laura. First-Year University Writing: A Corpus-Based Study with Implications for Pedagogy. Palgrave Macmillan, 2015.

Basit, Tehmina. Manual or Electronic? The Role of Coding in Qualitative Data Analysis. Educational Research, vol. 45, no. 2, 2003, pp. 143-154.

Beaufort, Anne. College Writing and Beyond: A New Framework for University Writing Instruction. Utah State UP, 2007.

---. College Writing and Beyond: Five Years Later. Composition Forum, vol. 26, 2012. http://compositionforum.com/issue/26/college-writing-beyond.php.

Bennett, Randy E., Paul Deane, and Peter W. van Rijn. From Cognitive-Domain Theory to Assessment Practice. Educational Psychologist, vol. 51, no. 1, 2016, pp. 87-107.

Bergmann, Linda S., and Janet Zepernick. Disciplinarity and Transfer: Students’ Perceptions of Learning to Write. WPA: Writing Program Administration, vol. 31, no. 1-2, 2007, pp. 124-149.

Bernard, H. Russell. Social Research Methods: Qualitative and Quantitative Approaches. 2nd ed., Sage, 2013.

Carletta, Jean. Assessing Agreement on Classification Tasks: The Kappa Statistic. Computational Linguistics, vol. 22, no. 2, 1996, pp. 249-254.

Collins, Harry, and Robert Evans. Rethinking Expertise. U of Chicago P, 2007.

Council of Writing Program Administrators. WPA Outcomes Statement for First-Year Composition. Revisions adopted 17 July 2014. WPA: Writing Program Administration, vol. 38, no. 1, 2014, pp. 142-146.

Council of Writing Program Administrators, National Council of Teachers of English, and National Writing Project. Framework for Success in Postsecondary Writing. Council of Writing Program Administrators, the National Council of Teachers of English, and National Writing Project, 2011. http://wpacouncil.org/files/framework-for-success-postsecondary-writing.pdf. Accessed 1 Nov. 2017.

Crumpler, Thomas P. Standing with Students: A Children’s Rights Perspective of Formative Literacy Assessments. Language Arts, vol. 95, no. 2, 2017, pp. 122-125.

Donahue, Christiane, and Lynn Foster-Johnson. Liminality and Transition: Text Features in Postsecondary Student Writing. Research in the Teaching of English, vol. 52, no. 4, 2018, pp. 359-381.

Elliot, Norbert, et al. ePortfolios: Foundational Measurement Issues. Journal of Writing Assessment, vol. 9, no. 2, 2016. http://journalofwritingassessment.org/article.php?article=110. Accessed 1 Nov. 2017.

Flower, Linda. The Construction of Negotiated Meaning: A Social Cognitive Theory of Writing. Southern Illinois UP, 1994.

Frazier, Dan. First Steps Beyond First Year: Coaching Transfer after FYC. WPA: Writing Program Administration, vol. 33, no. 3, 2010, pp. 34-57.

Gee, James Paul. A Sociocultural Perspective on Opportunity to Learn. Assessment, Equity, and Opportunity to Learn, edited by Pamela A. Moss, et al. Cambridge UP, 2008, pp. 76-108.

Graham, Steve, et al. Teaching Elementary School Students to be Effective Writers: A Practice Guide, NCEE 2012-4058. Washington, DC: National Center for Education Evaluation and Regional Assistance (NCEE), Institute of Education Sciences, U.S. Department of Education, 2012. http://ies.ed.gov/ncee/wwc/publications_reviews.aspx#pubsearch. Accessed 1 Nov. 2017.

Graham, Steve, et al. Teaching Secondary Students to Write Effectively, NCEE 2017-4002. Washington, DC: National Center for Education Evaluation and Regional Assistance (NCEE), Institute of Education Sciences, U.S. Department of Education, 2016. http://whatworks.ed.gov. Accessed 1 Nov. 2017.

Hillesund, Terje. Digital Reading Spaces: How Expert Readers Handle Books, the Web and Electronic Paper. First Monday, vol. 15, nos. 4-5, 2010, http://firstmonday.org/article/view/2762/2504. Accessed 1 Nov. 2017.

Hinsdale, Burke Aaron. The Teaching of Language Arts: Speech, Reading, and Composition. D. Appleton, 1896. https://www.hathitrust.org/. Accessed 1 Nov. 2017.

Horning, Alice S. Reading, Writing and Digitizing: Understanding Literacy in the Electronic Age. Cambridge Scholars, 2012.

Hyland, Ken, and Feng Jiang. Change of Attitude? A Diachronic Study of Stance. Written Communication, vol. 33, 2016, pp. 251-274. DOI: 10.1177/0741088316650399. Accessed 1 Nov. 2017.

Institute of Education Sciences. What Works Clearinghouse™ Procedures and Standards Handbook Version 4.0. U.S. Department of Education, 2017. https://ies.ed.gov/ncee/wwc/Docs/referenceresources/wwc_procedures_handbook_v4.pdf. Accessed 1 Nov. 2017.

Irving, Doug. Big Data, Big Questions. https://www.rand.org/blog/rand-review/2017/10/big-data-big-questions.html. Accessed 1 Nov. 2017.

Jarratt, Susan C., et al. Pedagogical Memory: Writing, Mapping, Translating. WPA: Writing Program Administration, vol. 33, no. 1-2, 2009, pp. 46-73.

Juzwik, et al. Defining and Doing the ‘English Language Arts’ in Twenty-First Century Classrooms and Teacher Education Programs. Research in the Teaching of English, vol. 51, no. 2, 2016, pp. 125-240.

Kelly-Riley, et al. An Empirical Framework for ePortfolio Assessment. International Journal of ePortfolio, vol. 6, no. 2, 2016, pp. 95-116. http://www.theijep.com/pdf/IJEP224.pdf. Accessed 1 Nov. 2017.

Lamont, Michèle. How Professors Think: Inside the Curious World of Academic Judgment. Harvard UP, 2009.

Leu, Donald J., et al. Writing Research through a New Literacies Lens. Handbook of Writing Research, 2nd ed., edited by Charles A. MacArthur, Steve Graham, and Jill Fitzgerald, Guilford, 2016, pp. 41-53.

Loretto, Adam, Sara DeMartino, and Amanda Godley. Secondary Students’ Perceptions of Peer Review of Writing. Research in the Teaching of English, vol. 51, no. 2, 2016, pp. 134-161.

MacArthur, Charles A., and Steve Graham. Writing Research from a Cognitive Perspective. Handbook of Writing Research, edited by Charles A. MacArthur, Steve Graham, and Jill Fitzgerald, Guilford, 2016, pp. 24-40.

meta-, prefix, n.2.a(a). OED Online. Oxford University Press, March 2017. Web. 6 June 2017.

Moxley, et al. Writing Analytics: Conceptualization of a Multidisciplinary Field. Journal of Writing Analytics, vol. 1, 2017, pp. v-xvii. https://journals.colostate.edu/analytics/article/view/153/95. Accessed 1 Nov. 2017.

Moxley, Joseph M., and David Eubanks. On Keeping Score: Instructors’ vs. Students’ Rubric Ratings of 46,689 Essays. WPA: Writing Program Administration, vol. 39, no. 2, 2016, pp. 53-80.

National Research Council. Education for Life and Work: Developing Transferable Knowledge and Skills in the 21st Century, edited by Committee on Defining Deeper Learning and 21st Century Skills, James W. Pellegrino & Margaret L. Hilton, Board on Testing and Assessment and Board on Science Education, Division of Behavioral and Social Sciences and Education. The National Academies P, 2012.

---. How Students Learn: History, Mathematics, and Science in the Classroom, edited by Committee on How People Learn, A Targeted Report for Teachers, M. Suzanne Donovan and John D. Bransford, Editors. Division of Behavioral and Social Sciences and Education. The National Academies Press, 2005.

Nelms, Gerald, and Ronda Leathers Dively. Perceived Roadblocks to Transferring Knowledge from First-Year Composition to Writing-Intensive Major Courses: A Pilot Study. WPA: Writing Program Administration, vol. 31, no. 1-2, 2007, pp. 214-240.

Odell, Lee, Dixie Goswami, and Anne Herrington. The Discourse-Based Interview: A Procedure for Exploring the Tacit Knowledge of Writers in Nonacademic Settings. Research on Writing: Principles and Methods, edited by Peter Mosenthal, Lynne Tamor, and Sean A. Walmsley, Longman, 1983, pp. 221-236.

Ong, Walter. The Writer’s Audience is Always a Fiction. Publications of the Modern Language Association, vol. 90, no. 1, 1975, pp. 9-21.

O’Reilly, Tenaha, and Kathleen M. Sheehan. Cognitively Based Assessment of, for and as Learning: A Framework for Assessing Reading Competency. ETS RR-09-26. Educational Testing Service, 2009. http://ets.org/Media/Research/pdf/RR-09-26.pdf. Accessed 1 Nov. 2017.

Phelps, Louise Wetherbee, and John W. Ackerman. Making the Case for Disciplinarity in Rhetoric, Composition, and Writing Studies: The Visibility Project. College Composition and Communication, vol. 62, no. 1, 2010, pp. 180-215.

Poe, Mya, et al., editors. Writing Assessment, Social Justice, and the Advancement of Opportunity. Perspectives on Writing. The WAC Clearinghouse and UP of Colorado, 2018. https://wac.colostate.edu/books/perspectives/assessment/.

Rand Reading Study Group. Reading for Understanding: Toward an R&D Program in Reading Comprehension. Rand Corporation, 2002. http://www.rand.org/. Accessed 1 Nov. 2017.

Strauss, Anselm, and Juliet J. Corbin. Grounded Theory Methodology: An Overview. Strategies of Qualitative Inquiry, edited by Norman K. Denzin and Yvonna S. Lincoln. Sage, 1998, pp. 158-183.

Sweeney, Meghan A., and Maureen McBride. Difficulty Paper (Dis)Connections: Understanding the Threads Students Weave between Their Reading and Writing. College Composition and Communication, vol. 66, no. 4, 2015, pp. 591-614.

Sword, Helen. Stylish Academic Writing. Harvard UP, 2012.

Tardy, Christine W., and Paul Kei Matsuda. The Construction of Author Voice by Editorial Board Members. Written Communication, vol. 26, no. 1, 2009, pp. 32-52.

Tversky, Amos, and Daniel Kahneman. Availability: A Heuristic for Judging Frequency and Probability. Cognitive Psychology, vol. 5, 1973, pp. 202-32.

VanKooten, Crystal. Identifying Components of Meta-Awareness about Composition: Toward a Theory and Methodology for Writing Studies. Composition Forum, vol. 33, 2016, http://www.compositionforum.com/issue/33/meta-awareness.php. Accessed 1 Nov. 2017.

Ware, Mark, and Mike Monkman. Peer Review in Scholarly Journals: An International Study into the Perspective of the Scholarly Community. Mark Ware Consulting, 2008. http://www.publishingresearch.net/documents/PeerReviewFullPRCReport-final.pdf. Accessed 1 Nov. 2017.

Yancey, Kathleen Blake, Liane Robertson, and Kara Taczak. Writing Across Contexts: Transfer, Composition, and Sites of Writing. Utah State UP, 2014.

Message in a Bottle from Composition Forum 40 (Fall 2018)

Online at: http://compositionforum.com/issue/40/message-bottle.php

© Copyright 2018 Norbert Elliot, Alice Horning, and Cynthia Haller.

Licensed under a Creative Commons Attribution-Share Alike License.
