Composition Forum 40, Fall 2018

http://compositionforum.com/issue/40/

When Rubrics Need Revision: A Collaboration Between STEM Faculty and the Writing Center

Abstract: Students who receive instruction in discipline-specific communication perform better in introductory and upper-level STEM courses. In this study, researchers investigate how writing center intervention can aid STEM faculty in revising assignment rubrics and conveying to students the discourse conventions and expectations for writing tasks. The results suggest that the writing center, though often discussed and marketed as a student support service, can fill a gap by providing support to faculty.

Intro

Conversations among composition instructors, writing center representatives, and faculty members often begin with the familiar question of why students in {insert discipline here} still can’t write, even after successfully completing composition courses. The topic puts composition instructors and writing center representatives on the defensive while prompting everyone involved to re-evaluate and reflect on their own pedagogy. We know that students must be able to communicate in a discipline’s preferred discourse to comprehend discipline-specific content (Martin; Unsworth), but faculty, including those in science, technology, engineering, and math (STEM) fields, often struggle to teach their discipline’s discourse features because the formal rhetoric of a field is difficult to determine (Fahnestock; Walsh). Additionally, faculty immersed in a discipline’s discourse often struggle to judge which field- and course-specific expectations must be stated explicitly for students who are less familiar with that discourse.

From these conversations emerged an interdisciplinary project that brought writing center representatives into an introductory STEM course. Specifically, our case study focused on writing center tutor intervention in an introductory geology course for non-majors. Unlike many courses with writing fellows or embedded tutors, this course was not designated writing intensive (WI) and was therefore not associated with the campus Writing Across the Curriculum (WAC) program. Nevertheless, the STEM faculty member noted that students’ inability to communicate in the discourse of science impeded their mastery of course content. His immediate concern was his students’ performance on the written short-answer questions on exams: students would write around the question, using elaborate but incorrect terminology and often answering a different question altogether.

In response to the question of how to help students who struggle to write in the disciplines, faculty and administrators alike often answer simply, “send your students to the writing center.” For writing center administrators, of course, that answer leads to many more questions. If composition instructors cannot produce successful writers across the disciplines, what can writing center tutors (who are often undergraduate and graduate students) achieve that more seasoned professionals cannot? How can the writing center fill this gap in student achievement, and how can we prepare student tutors to take on this difficult and important work? What is the STEM student’s responsibility, and what are the responsibilities of the composition instructor, the writing tutor, and the STEM professor?

Our project developed out of these inquiries. Our research focused primarily on how writing center intervention can lead a STEM faculty member to revise the written guidelines that explain his expectations to students. Two formal research questions guided our work during the Fall 2015 semester:

- How accurately do the rubrics utilized for writing evaluation in STEM courses express the expectations for written discourse to the students?

- How can generalist writing center intervention (tutors who are trained in composition and writing center theory, but not in the specific disciplinary content present in students’ papers) specifically aid STEM faculty, resulting in revisions to the written assignment sheets and rubrics given to students?

Because the STEM course we studied was not designated writing intensive, the students’ primary source of writing instruction, apart from the atypical writing center intervention during the Fall 2015 semester, was the set of expectations described on the rubric distributed by the STEM faculty member. The same is true for many students in courses across the disciplines that assign small writing tasks, such as short-answer exam questions, without focusing explicitly on writing instruction. Rubrics are also of immediate concern to those working with students in the writing center. Though tutors are trained in strategies for helping students who write in the disciplines, tutors come from a variety of disciplines (on our campus, often English), so their ability to help students with writing assignments depends greatly on the written instructions (assignment sheets and rubrics) that students bring from their courses. Clarity and precision in faculty members’ written course materials are therefore key factors in whether students succeed in the writing assignments for a particular course.

This case study showed that writing center intervention helps faculty articulate discipline-specific values in their own assignment sheets and rubrics, which benefits student learning and writing. While embedded tutoring may not be feasible for all writing centers or all STEM classes, the study demonstrates that writing center intervention benefits STEM faculty and students by promoting the improvement of teaching and learning: when writing centers work directly with faculty, students’ familiarity with field-specific discourse conventions and with the expectations for writing tasks improves. Additionally, recognizing that writing centers most often lack the resources to provide embedded tutoring or other course-specific support, the study offers a model that faculty can use to develop rubrics independently.

Literature Review

In writing center scholarship, the writing center has long been much more than just a physical space. In Stephen North's touchstone essay “The Idea of the Writing Center,” the aim of the writing center’s pedagogy and praxis is the production of “better writers, not better writing” (438). Often, this aim is accomplished in a location on campus or online referred to as the “Writing Center.” Writing center tutors, though, have left this physical space and have been integrated into composition and WAC classrooms. Embedded tutors (or writing fellows)—tutors who work with individual faculty members across disciplines in the classroom environment—function as bridges between writing centers and WAC faculty (Kinkead, et al.; Spigelman and Grobman; Hall and Hughes; Dvorak, Bruce, and Lutkewitte; Hannum, Bracewell, and Head). Likewise, classroom interventions, such as tutor talks, are typical services that writing centers provide in the context of classroom sessions (Ryan and Kane). Much of the scholarship surrounding embedded tutoring is tied to the WAC or composition classroom; likewise, the primary focus of these embedded tutors or writing fellows is aiding the development of student writers, though revisions to faculty writing for students are often noted as additional benefits to these programs.

Students outside of WAC and composition classrooms must still negotiate the linguistic demands of their discipline, even when they are not given explicit, formal writing assignments. On our campus, many STEM courses are designated writing intensive and are therefore associated with WAC, but many more are not; regardless of designation, students must negotiate the demands of scientific discourse in order to learn the course content. Likewise, faculty members outside of WAC programs still need to consider carefully the language of the prompts, rubrics, and other instructional materials they provide whenever they assign any evaluation that involves written discourse.

For students in science courses, learning the conventions of scientific discourse is crucial not only for communicating knowledge in writing or speech but also for learning and understanding the content presented in a science course (Martin). The language of science differs from the language of the humanities, and that difference is neither arbitrary nor merely aesthetic; it points to the values essential to the field itself. For students, gaining access to the literacies valued in the sciences is crucial to assimilating the content taught in science courses (Unsworth). As students practice STEM written communication and receive the help and instruction they need in their early introductory courses, they will perform better in both introductory and upper-level STEM courses.

Because many STEM courses are not designated as WAC courses, and many do not include explicit out-of-class writing assignments, students often do not think to make writing center appointments to prepare for written exam components such as short-answer questions. Though students may believe they are simply being tested on their knowledge of a subject, short-answer questions evaluate their written communication skills as well as their knowledge of course content. Weakness in the first can keep a student from demonstrating the second, and developing the first skill set, the ability to communicate content in a discipline’s preferred discourse, is certainly within the purview of the writing center.

Embedded tutoring benefits not only students who are expected to compose in the disciplines but also faculty who assign writing in the disciplines. As Dustin Hannum, Joy Bracewell, and Karen Head note regarding their embedded tutoring experiences, “we aim to bring this experience from the center to the instructors in their own classrooms—creating situations where we help them become better teachers and communicators while also helping their students” (par. 7). A benefit of embedded tutoring, according to Emily Hall and Bradley Hughes, is that it allows faculty to “reflect critically on their own practices of designing writing assignments” (21). Faculty, too, need to consider their role in their students’ ability to succeed when they compose assignment sheets and grading criteria. Dory Hammersley and Heath Shepard identify vague written instructions as a frequent problem for writing tutors who work with students composing across the disciplines. Students and tutors alike often report difficulty fulfilling assignment guidelines because of “unclear assignments”; one solution to this problem, Hammersley and Shepard note, could be the creation of grading rubrics.

For our research study, writing center representatives worked closely with students and with the STEM faculty member to help interpret, and later revise, assignment sheets and rubrics. These representatives, acting as intermediaries and interpreters of the values of a STEM discipline, proved beneficial to student success in mastering course content (as indicated by students’ increased success in correctly answering short-answer exam questions), and, perhaps most notably, their work resulted in a substantial change to the STEM faculty member’s course writing guidelines. Writing center intervention, then, led not just to revisions of student writing but to significant revisions of the STEM faculty member’s rubrics and grading practices.

Methods

Prior to the beginning of the Fall 2015 semester, writing center representatives met with the instructor of GLY 200: Physical Geology for non-majors, and the representatives visited the course three times during the semester. The class consisted of forty undergraduate students, each of whom had either already taken English 101: Introduction to Composition or was taking it that semester. The representatives visited during the first week of class, when students completed an in-class writing assignment; prior to a major exam that contained short-answer questions; and following that exam.

Of these four writing center interventions (one prior to the course and three during it), the two earliest (the pre-semester meeting and the first classroom visit) are the most significant to this research. In these interventions, writing center representatives reviewed an assignment rubric with the faculty member before the course began and then, early in the semester, evaluated student writing and the STEM faculty member’s feedback on that writing. They also compared both the student writing and the faculty member’s feedback against the written expectations set out in the STEM faculty member’s rubric. These interventions provided valuable information about the disconnect between the STEM faculty member’s expectations (as manifested in his actual grading practices) and the rubric he had created.

In the first intervention, prior to the beginning of the semester, the STEM faculty member submitted a rubric for short-answer writing to the writing center representatives for review and comment (Table 1).

Table 1. Initial rubric for Geology 200

| Criteria | Inadequate | Adequate |
|---|---|---|
| Completeness | 0 to 1 points: Submitted answers are greatly lacking in their level of completeness - i.e. very little written, or possibly only one minor portion of the question is answered. | 1 to 2 points: Attempts to answer all parts of question. |
| Knowledge Demonstrated | 0 to 1 points: Little attempt made to interpret and paraphrase/summarize the information from the text book, and large majority of answer is incorrect. | 1 to 2 points: Majority of answer is correct, good attempt made to interpret and paraphrase/summarize information from text book, and to understand the material. |
| Grammar | 0 to 1 points: Submitted answer very poorly written with no regard given to proper grammar. Sentences incomplete, incorrect spelling, etc. | 1 to 1 points: Very well written, little to no spelling/grammatical mistakes or incomplete sentences. |

The writing center representatives gave the STEM instructor two major pieces of feedback on the initial rubric. First, the representatives suggested reordering the levels of achievement, placing “adequate” on the left and “inadequate” on the right (see Table 2). This suggestion, though small, stemmed from a concern with the psychology of the student composer reviewing assessment standards. Because English-speaking students read from left to right, we were concerned that the first information students received about the standards for their compositions was framed in the negative. Standard 1 of the National Council of Teachers of English (NCTE) Standards for the Assessment of Reading and Writing discusses the importance of cultivating assessment situations that encourage self-reflection rather than defensiveness (Johnston, et al.). We believed that rearranging the rubric spatially could better achieve this goal. Next, a new criterion was added to the rubric: extraneous information (see Table 2). In the previous rubric, this area of concern was subsumed under the criterion “completeness.” However, the description under that criterion did not fully describe expectations for completeness and/or concision. Through conversations, all parties involved realized that completeness and concision were separate, though related, expectations for students composing in the discipline. Accordingly, the new criterion was added, and the point values for the existing criteria were re-evaluated to better represent the STEM instructor’s desired areas of focus for the assignment. The knowledge demonstrated criterion was assigned up to two points, while completeness, extraneous information, and grammar were each worth up to one point (see Table 2). Partial points (in half-point increments) were possible for each criterion.

The revised rubric was distributed to students at the beginning of the course to explain the writing expectations and grading criteria for their written responses to in-class writing and exam prompts.

Table 2. Revised (but not final) rubric for Geology 200

| Criteria | Adequate | Inadequate |
|---|---|---|
| Knowledge Demonstrated | 1 to 2 points: Majority of answer is correct, good attempt made to interpret and paraphrase/summarize information from text book, and to understand the material. | 0 to 1 points: Little attempt made to interpret and paraphrase/summarize the information from the text book, and large majority of answer is incorrect. |
| Completeness | 0.5 to 1 points: Attempts to answer all parts of question. | 0 to 0.5 points: Submitted answers are greatly lacking in their level of completeness - i.e. very little written, or possibly only one minor portion of the question is answered. |
| Extraneous Information | 0.5 to 1 points: None to very little extraneous information. Information written in answer is focused and to the point. | 0 to 0.5 points: Answer is largely composed of extraneous information that does not support a focused answer. Also includes answers that are vaguely written. |
| Grammar | 0.5 to 1 points: Very well written, little to no spelling/grammatical mistakes or incomplete sentences. | 0 to 0.5 points: Submitted answer very poorly written with no regard given to proper grammar. Sentences incomplete, incorrect spelling, etc. |

Following the evaluation and revision of the written rubric, the writing center representatives visited the STEM classroom. The goal of this intervention was to help students learn how to read writing prompts (the same sort of prompts they would receive in the short-answer section of exams), interpret the professor’s rubric, and tailor their written responses to fulfill the assignment criteria. The sample prompt discussed during the first classroom visit was as follows: “What are the three types of convergent plate boundaries? How will interactions of the two crustal types and differences in buoyancy result in each of the convergent boundary types?” With the writing center representatives, students broke the prompt down into manageable chunks, outlining the layered questions embedded in the prompt and the information their responses needed to include. The students also reviewed the rubric (Table 2).

After discussing the prompt and the rubric, students answered the prompt individually in class, with ten minutes to write, and were reminded to keep the rubric criteria in mind. Students then formed small peer review groups. In groups, students compared their responses and crafted a single group response, drawing on the strongest parts of their individual responses and revising as needed. As students worked on their revisions, the writing center representatives circulated from group to group, reading responses and providing additional feedback and suggestions for revision.

Following this group work, students were asked to share with the whole class the revisions they composed as a group while working with the embedded tutors. Several students volunteered to read their initial and revised responses. As students contributed their own compositions, the representatives probed students’ revision decisions by asking students to explain how their revisions connected back to their clarified understanding of the original prompt and/or rubric.

The writing center representatives concluded their first classroom visit with a discussion of the values of geology as a discipline, as manifested in the rubric (a discourse that privileges concision, objectivity, and accuracy). They then encouraged students to continue working on their writing for the course by visiting the campus writing center, and they described the online and face-to-face services available to students.

Following the class visit, the STEM faculty member evaluated the students’ revised responses according to the rubric. The writing center representatives collected these responses and evaluated the relationship between the STEM faculty member’s evaluation (rubric scores and written feedback) and the criteria listed on the rubric.

The two additional visits the writing center representatives made to the STEM classroom (prior to a major exam with short-answer questions and following that exam) were not considered in this study because, during those visits, the representatives focused on interventions with students rather than with the STEM faculty member.

Results

Table 3 describes the relationship between the errors marked and the criteria listed on the rubric. When reviewing the STEM faculty member’s evaluation of the students’ responses, the writing center representatives determined that the STEM professor marked errors relating to content (the completeness and correctness of the students’ answers) as well as grammar (the adherence to basic rules of spelling, diction, syntax, tone, and organization), as was suggested on the rubric. The STEM faculty member’s comments identified nine skills that the students either successfully or unsuccessfully demonstrated in their responses. The writing center representatives organized these skills according to the criteria listed in the rubric (see Table 3). Errors related to definition and analysis fell under two criteria in the rubric: knowledge demonstrated and completeness. For example, if the STEM faculty member identified that analysis was missing for one portion of a group’s response, the faculty member deducted points from the knowledge demonstrated and/or completeness categories, depending on the significance of the absence. Thus, for a single error, a student could lose points in two or more categories (considering the potential for extraneous information and grammar errors as well). Errors relating to extraneous information were assigned to the extraneous information criterion. The STEM faculty member marked six distinct error types within the grammar criterion: word choice, spelling, syntax, organization, tone, and precision.

Table 3. Criteria in rubric, point values, and associated skills

| Criteria listed in rubric (point value) | Associated skills |
|---|---|
| Knowledge Demonstrated (0-2 points) | Definition, analysis |
| Completeness (0-1 points) | Definition, analysis |
| Extraneous Information (0-1 points) | Extraneous information |
| Grammar (0-1 points) | Word choice, spelling, syntax, organization, tone, precision |

Patterns of Feedback

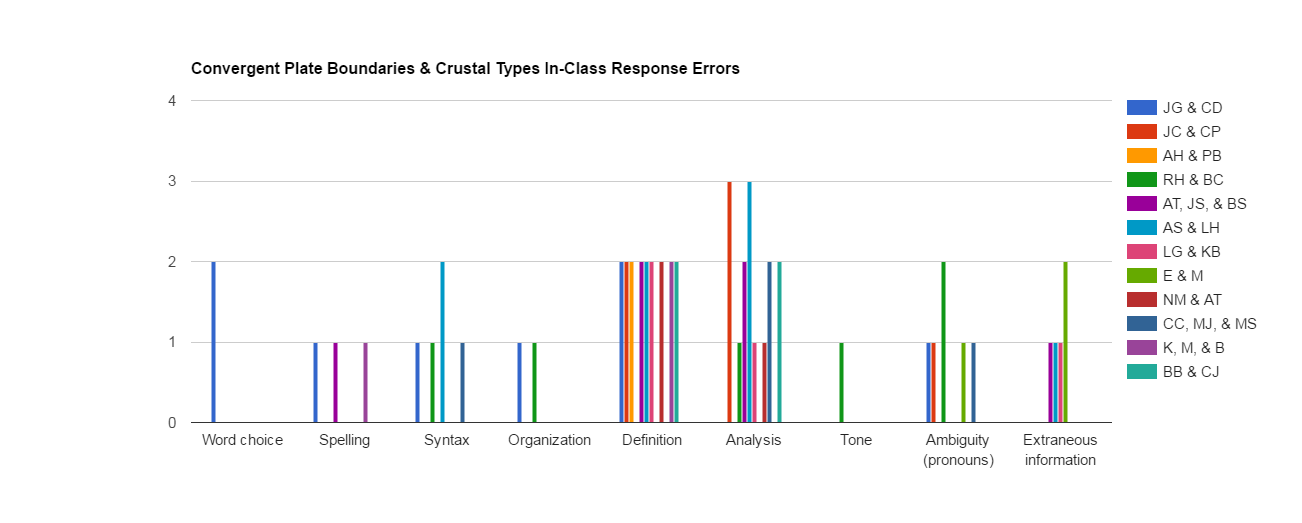

Figure 1 displays the patterns in the STEM instructor’s feedback on the students’ responses, identified through an assessment of the instructor’s point deductions and associated comments. The highest percentage of feedback related to the knowledge demonstrated and completeness criteria, which were necessarily considered together because the evaluated skills of definition and analysis fell under both. Of the 57 errors the STEM faculty member marked, 33 related to the knowledge demonstrated and completeness criteria (58% of total errors); this feedback identified incorrect or missing definitions (32% of total errors) and analysis (26% of total errors). The STEM faculty member marked 5 errors related to extraneous information (9% of total errors) and 19 errors related to grammar (33% of total errors).

Figure 1. Patterns of feedback on in-class responses

Reviewing these percentages in the context of the rubric revealed a disproportion in the instructor’s attention to extraneous information and grammar. While the extraneous information criterion was worth 20% of the points a student could earn on a short-answer response (1 of 5 points), only 9% of the instructor’s error notations and comments addressed this category. Conversely, while the grammar criterion also accounted for 20% of the potential points, 33% of the instructor’s error notations and comments focused on grammar. The instructor’s attention to knowledge demonstrated and completeness was more balanced: these criteria accounted for 60% of the potential points (3 of 5) and 58% of the instructor’s error notations and comments.
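The comparison above rests on simple proportional arithmetic: each criterion’s share of the five rubric points in Table 2 set against its share of the 57 errors marked. As a minimal sketch, assuming those point values and the error counts reported in this section (with knowledge demonstrated and completeness pooled, as in the analysis), the check can be reproduced in a few lines of Python. The dictionary names and the `compare_shares` helper are hypothetical, introduced only for illustration.

```python
# Hypothetical illustration: maximum points per criterion on the Table 2 rubric
# (5 points total). Knowledge demonstrated and completeness are pooled because
# the instructor's feedback on definition and analysis fell under both.
rubric_points = {
    "knowledge + completeness": 2 + 1,
    "extraneous information": 1,
    "grammar": 1,
}

# Errors the instructor marked on the in-class responses, as reported above.
errors_marked = {
    "knowledge + completeness": 33,
    "extraneous information": 5,
    "grammar": 19,
}


def compare_shares(points, errors):
    """Return {criterion: (share of points %, share of errors %)}, rounded to whole percents."""
    total_points = sum(points.values())
    total_errors = sum(errors.values())
    return {
        name: (
            round(100 * points[name] / total_points),
            round(100 * errors[name] / total_errors),
        )
        for name in points
    }


# Positive gap: the criterion drew more feedback than its point weight.
for name, (point_pct, error_pct) in compare_shares(rubric_points, errors_marked).items():
    gap = error_pct - point_pct
    print(f"{name:26} points {point_pct:3d}%  errors {error_pct:3d}%  gap {gap:+d}")
```

Run as written, the sketch reproduces the shares reported above (60% of points vs. 58% of errors, 20% vs. 9%, and 20% vs. 33%); a negative gap marks a criterion that drew less feedback than its weight on the rubric would suggest.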

Intervention with STEM Instructor

The writing center representatives presented their findings to the STEM instructor, including the patterns in how errors were identified and the disproportionate attention to extraneous information and grammar errors. The representatives also discussed with the instructor errors that had been incorrectly identified. Specifically, the STEM instructor had commented to several students that their responses contained run-on sentences that were, in fact, grammatically correct. The discussion revealed that while the instructor’s terminology (labeling these sentences run-ons) was inaccurate, he had accurately identified in the responses a failure to adhere to STEM writing conventions related to syntax (the use of independent clauses and simple sentence structures).

The writing center intervention prompted the STEM instructor to revise his rubric to outline more clearly the expectations for STEM writing in terms of content and grammar (see Table 4). The final, revised rubric contained the following changes:

- One criterion was renamed (extraneous information changed to focus).

- One criterion was deleted (grammar).

- The levels of achievement were renamed and an intermediary level of achievement was added. Rather than identifying students’ adherence to the criteria as “adequate” or “inadequate” (see Table 2), the revised rubric identified students’ adherence to the criteria as “proficient,” “competent,” or “novice” (see Table 4).

- The descriptions for the proficient (formerly adequate) and novice (formerly inadequate) levels of achievement were revised. Slight wording changes were made to the proficient and novice levels of achievement in the knowledge demonstrated and completeness criteria. More substantial wording changes were made to the focus (formerly extraneous information) criterion.

Table 4. Revised and final rubric for Geology 200

| Criteria | Proficient | Competent | Novice |
|---|---|---|---|
| Knowledge Demonstrated | 1.5 to 2 points: Majority of answer correct; good attempt made to interpret, paraphrase, and summarize information from lab book and to understand material. | 1 to 1.5 points: Some parts of answer correct; some attempts to interpret, paraphrase, and summarize information from lab book and to understand material. | 0 to 1 points: Answer largely incorrect; little to no attempt made to interpret, paraphrase, and summarize information from lab book or to understand material. |
| Completeness | 1.5 to 2 points: All parts of question clearly addressed. | 1 to 1.5 points: Only select portions of questions are answered. Approximately half of question is addressed. | 0 to 1 points: Largely incomplete answer - little written, only a minor portion of question addressed. |
| Focus | 0.5 to 1 points: Answers are to the point and include only relevant information that supports answer. | 0.25 to 0.5 points: Some parts of answer are vaguely written or include some extraneous information that does not support answer. | 0 to 0.25 points: Largely extraneous information that does not help to answer question; vaguely written answer. |

The first revision, renaming the extraneous information criterion to focus, addressed an issue that surfaced in the initial rubric (Table 1) and persisted in the first revision (Table 2): neither rubric fully described expectations for concision and precision in students’ writing. The criterion’s progression from completeness (Table 1) to extraneous information (Table 2) to focus (Table 4) better represented the STEM instructor’s desired areas of focus for writing tasks.

The second change, deleting the grammar criterion, developed in response to the writing center representatives’ comments about the conditions of the writing situation and their discussion with the STEM instructor about students whose first language is not English. The representatives pointed out that students wrote by hand, without access to spell- or grammar-check software, under time constraints, and without the opportunity to revise, both in the in-class activity and on future exams; under these conditions, common grammar errors (such as spelling mistakes) could be expected. Referencing standard 6 of the NCTE’s Standards for the Assessment of Reading and Writing, which concerns fairness and equity, the representatives additionally noted that the grammar criterion assumed a mastery of basic language skills that, in practice, not all students had achieved, particularly non-native English speakers (Johnston, et al.). The assessment of the patterns of error revealed that students whose first language was not English lost more points in this category than native English speakers. Accordingly, the STEM instructor decided to remove the criterion.

Renaming the levels of achievement and adding an intermediate level allowed for greater precision in evaluating each component of students’ compositions. Likewise, keeping in mind the NCTE’s Standards for the Assessment of Reading and Writing, particularly standard 3 (“[t]he primary purpose of assessment is to improve teaching and learning”) and standard 4 (“[a]ssessment must reflect and allow for critical inquiry into curriculum and instruction”), the writing center representatives suggested revising the rubric to better reflect the curriculum of the course and the expectations for discourse; the rubric, after all, serves multiple purposes, acting not just as a means of assessment but also as a pedagogical tool (Johnston, et al.). After the representatives evaluated the STEM instructor’s feedback and discussed the grading process with him, all parties recognized the need for gradations in the assessment document and accordingly replaced the binary categories of adequate and inadequate.

Finally, the descriptions of the proficient (formerly adequate) and novice (formerly inadequate) levels of achievement were revised to articulate more clearly the instructor’s desired areas of focus for student writing.

Discussion

Our study influenced the relationship between the writing center on our campus and STEM faculty members in several ways. First, writing center representatives were awarded funds through the Hedrick Program Grant, associated with the university’s Center for Teaching and Learning, to further study the influence of writing center interventions in STEM classes. In pursuit of this goal, the Writing Center provided three tutors with additional training in STEM writing conventions and modified its online appointment system so that STEM students could make appointments with those tutors. During the Fall 2016 semester, these tutors also visited 13 sections of 6 STEM classes to continue interventions with STEM faculty and students: MTH 140: Applied Calculus; MTH 229H: Honors Calculus with Analytic Geometry I; CS 305: Software Engineering I; GLY 212: Introduction to Field Methods; BSC 322: Principles of Cell Biology; and CE 443: Transportation Systems Design. During the Spring 2017 semester, the tutors who had visited STEM classes created pedagogical materials for faculty and teaching assistants in STEM fields. These materials included one-page tip sheets on crafting assignments and rubrics that make discipline-specific writing expectations clear, sample assignment sheets, sample rubrics, and sample lesson plans for teaching writing in the disciplines.

The study also raised new questions for research on rubrics. An unresolved issue in the STEM faculty member’s final rubric (see Table 4) concerns overlapping criteria in how errors are recorded: although criteria were renamed or deleted and the levels of achievement were revised, errors related to definition and analysis still fell under two criteria in the final rubric, knowledge demonstrated and completeness. This overlap allowed the STEM faculty member to deduct points from either or both criteria for a single error. An analysis of when and why deductions were applied to both criteria would make a useful follow-up study of grading and rubrics.

Conclusion and Further Research

Our study supports our belief that writing center intervention in STEM classes benefits STEM faculty and, ultimately, STEM students through the revision of course materials. In this case, writing center intervention aided a STEM faculty member in revising an assignment rubric so that he could better convey to students his expectations for their performance on class writing assignments.

On our campus, the WAC program provides support to classes labeled “writing intensive” (WI). While WAC aids faculty teaching writing intensive courses on our campus, there is little support for faculty teaching courses that require only small amounts of writing. In this particular intervention, the writing center operated as a separate arm of the WAC program for a course that required small amounts of writing but was not formally affiliated with WAC. The writing center, though often discussed and marketed as a student support service, can fill a gap by providing this type of support to faculty, where feasible. Generalist writing center tutors who are trained to tutor students in writing across the disciplines are particularly well equipped to give faculty in the disciplines feedback on the composition of their assignments and rubrics. This case study showed that writing center intervention can benefit student writing by helping faculty articulate discipline-specific values in their own written assignment sheets and rubrics.

All courses require students to articulate their knowledge of course material through language, and knowing a discipline’s content isn’t enough to ensure student success: students also must have the resources to interpret the genre in which they are writing. In general education composition courses, students are taught to analyze a text by evaluating its genre, audience, purpose, and rhetorical situation. These analytical skills are crucial for students as they compose in a variety of disciplines. These transferable skills, though, depend on the clarity and precision of an instructor’s written assignment sheets and rubrics.

Embedded tutoring may not be feasible for all writing centers or all STEM classes, but this study demonstrates that writing center intervention does benefit STEM faculty and students by promoting the improvement of teaching and learning. This case study provides an example of transformation that can come from writing center intervention, if the writing center has the resources and ability. For campuses where writing centers lack resources to provide specific support to individual courses, faculty can use this study to consider their own rubrics and independently revise for precision and clarity.

Acknowledgments: We would like to thank Dr. Mitchell Scharman for his contributions to the study.

Works Cited

Dvorak, Kevin, Shanti Bruce, and Claire Lutkewitte. Getting the Writing Center into FYC Classrooms. Academic Exchange Quarterly, 2012, pp. 113-19, http://rapidintellect.com/AEQweb/5243NEW.pdf. Accessed 13 Feb. 2017.

Fahnestock, Jeanne. Rhetoric of Science: Enriching the Discipline. Technical Communication Quarterly, vol. 14, no. 3, 2005, pp. 277-86, http://tandfonline.com/doi/abs/10.1207/s15427625tcq1403_5. Accessed 10 Feb. 2017.

Hall, Emily, and Bradley Hughes. Preparing Faculty, Professionalizing Fellows: Keys to Success with Undergraduate Writing Fellows in WAC. The WAC Journal, vol. 22, Nov. 2011, pp. 21-40, http://wac.colostate.edu/journal/vol22/hall.pdf. Accessed 10 Feb. 2017.

Hammersley, Dory, and Heath Shepard. Translate-Communicate-Navigate: An Example of the Generalist Tutor. The Writing Lab Newsletter, vol. 39, no. 9-10, May 2015, pp. 18-19, wlnjournal.org/archives/v39/39.9-10.pdf. Accessed 1 Feb. 2017.

Hannum, Dustin, Joy Bracewell, and Karen Head. Shifting the Center: Piloting Embedded Tutoring Models to Support Multimodal Communication Across the Disciplines. Praxis, vol. 12, no. 1, 2014, http://praxisuwc.com/hannum-et-al-121/. Accessed 7 Feb. 2017.

Johnston, Peter, et al. Standards for the Assessment of Reading and Writing. NCTE/IRA Joint Task Force on Assessment, National Council of Teachers of English, 2009, http://ncte.org/standards/assessmentstandards/taskforce. Accessed 9 Feb. 2017.

Kinkead, Joyce, et al. Situations and Solutions for Tutoring Across the Curriculum. Writing Lab Newsletter, vol. 19, no. 8, April 1995, pp. 1-5, http://wlnjournal.org/archives/v19/19-8.pdf. Accessed 7 Feb. 2017.

Martin, J.R. A Contextual Theory of Language. The Powers of Literacy: A Genre Approach to Teaching Writing, edited by Bill Cope and Mary Kalantzis, Falmer, 1993, pp. 116-36.

North, Stephen M. The Idea of a Writing Center. College English, vol. 46, no. 5, Sept. 1984, pp. 433-46, http://jstor.org/stable/377047. Accessed 9 Feb. 2017.

Ryan, Holly, and Danielle Kane. Evaluating the Effectiveness of Writing Center Classroom Visits: An Evidence-Based Approach. Writing Center Journal, vol. 34, no. 2, 2015, pp. 145-72, http://jstor.org/stable/43442808. Accessed 10 Feb. 2017.

Spigelman, Candace, and Laurie Grobman. On Location in Classroom-Based Writing Tutoring. On Location: Theory and Practice in Classroom-Based Writing Tutoring, edited by Candace Spigelman and Laurie Grobman, Utah State UP, 2005, p. 10.

Unsworth, Len. ‘Sound’ Explanations in School Science: A Functional Linguistic Perspective on Effective Apprenticing Texts. Linguistics and Education, vol. 9, no. 2, 1997, pp. 199-226, http://sciencedirect.com/science/article/pii/S0898589897900139. Accessed 8 Feb. 2017.

Walsh, Lynda. The Common Topoi of STEM Discourse: An Apologia and Methodological Proposal, with Pilot Survey. Written Communication, vol. 27, no. 1, 2010, pp. 120-56, http://journals.sagepub.com/doi/abs/10.1177/0741088309353501. Accessed 8 Feb. 2017.

When Rubrics Need Revision from Composition Forum 40 (Fall 2018)

Online at: http://compositionforum.com/issue/40/rubrics.php

© Copyright 2018 Anna Rollins and Kristen Lillivis.

Licensed under a Creative Commons Attribution-Share Alike License.
