Composition Forum 25, Spring 2012

http://compositionforum.com/issue/25/

Placing Students in Writing Classes: One University’s Experience with a Modified Version of Directed Self Placement

Abstract: This article discusses our university’s attempt to analyze whether our system of First Year Writing placement serves the needs of our diverse student body. The theory behind Directed Self Placement (DSP) is appealing, so our program adopted a modified version of it, and after several years, decided to evaluate it quantitatively. The authors, a First Year Writing professor and a psychologist trained in statistical analysis, teamed up to gather and analyze data. We sorted our sample by course grade, standardized test scores, gender, race, and prior course grades, running regressions and searching for correlations between these data and our DSP matrix. Our research shows that DSP in the modified form of our placement matrix does not predict student success as measured by First Year Writing grades as well as simple standardized test scores do. Though placing students using test scores alone has limitations, we conclude that DSP, at least in the simplified form in which we use it, does not correct for these limitations, and therefore is not preferable.

Introduction

Our campus is a regional branch of a large state university system. It finds itself with two competing goals, with First Year Writing poised at their intersection. The B.A. and B.S. degrees that our university confers do not identify our regional branch, and thus are expected to meet the standards of the flagship institution. At the same time, we are under pressure to increase enrollment and to serve the needs of local students, and thus we are a de facto open-enrollment campus. We take pride in making educational opportunities available to a wide range of students, yet for our degree, and therefore our institution, to maintain its accreditation and reputation, we must also demonstrate that the education of our graduates is comparable to that of our other system schools.

First Year Writing plays a crucial role in balancing these competing interests. One of its goals is to introduce students to collegiate ways of thinking, so that all students finish the first year ready to be college students. Those students who need extra time or help reaching this goal, then, need to spend more time in First Year Writing. Though none of what we have said so far is new, it raises problems that are still unresolved. How can students from widely diverse backgrounds, with extremely uneven degrees of preparation, all wind up “on the same page” in a semester, or even a year? Though we make placement decisions with the best of intentions, to what extent do they merely duplicate uneven prior educational opportunity? We admit students who come nowhere near “making the cut” at the flagship institution, yet if we don’t transform them in four years into the rough equivalent of those students, then we are taking their money and time under false pretenses. While it’s true that “[f]inancial concerns, image, and reputation are often the driving forces behind decisions that affect what actually takes place in education” (Singer 102), we cannot, in good conscience, ignore the role that race plays in this uneven preparation, and thus in placement and subsequent student success. First Year Writing needs to own its role in the sorting process we’ve been describing. In order to make ethical placement decisions, we need to know whom we are sorting—how and why.

Placement of students in First Year Writing is a contentious issue that has received generous scholarly attention. The goal of placement is to determine which level of writing class will be most helpful to any given student, ensuring he or she receives all the writing preparation needed, without wasting the student’s time and money in courses that aren’t personally necessary. This goal is notoriously elusive, though. As Rich Haswell laments, “When research has compared placement decisions with the subsequent student performance in the course, good match is rarely if ever found” (3). In an essay about economic issues in Basic Writing placement, Ira Shor notes that “[l]iteracy and schooling are officially promoted as ladders to learning and success but are unequally delivered as roads to very different lives depending on a student’s race, gender, and social class” (31). His point is obviously not that we place students based on these indicators, but rather that inequities early in people’s lives shape their later performance along these lines, and our placement methods need to acknowledge that difference. In our university’s case, the pressures on the institution to meet the competing goals outlined above mean that many minority and underprepared students are admitted, but far fewer graduate. First Year Writing placement can’t solve the problems of economic inequality, but we do need to attend to them in order to educate as many of our students as well as we possibly can.

One of us heard a very disconcerting comment at a composition conference not long ago. “We spend so much time, energy, and money trying to place students in the appropriate level of First Year Writing,” an audience member observed, “when it could really come down to a bathroom test. Ask new students how many bathrooms there were in their childhood home. If there was one bathroom, place them in Remedial Writing. If there were two, place them in the first semester of a year-long program. If there were more than two, place them directly into the second semester.” The nervous laughter that followed this comment reveals much about what First Year Writing hopes to do and about the challenges we face on the way there. We know that students need writing instruction in college, that most need a course that will help them transition into collegiate expectations and structures, and that many need extensive grammar and literacy instruction. How can we know that our program is making placement decisions based on the real needs of each student, rather than on arbitrary, class-marked, or simply irrelevant criteria?

This article discusses our university’s attempt to address this troubling question, within the larger context of quantitative scholarship on Directed Self Placement (DSP). In 1999, our university’s Writing Program adopted a modified version of DSP, based loosely on the principles outlined in Daniel Royer and Roger Gilles’s 1998 CCC article “Directed Self-Placement: An Attitude of Orientation.” In 2009, the chair of the English Department asked for a statistical analysis of the program’s effectiveness as part of a university-wide assessment initiative. With a very limited budget (no funding was provided for this research), the department decided to equate students’ success with their assigned grades. Brian Huot has argued that both standardized tests and classroom grading measure similar social and environmental factors (social class, access to privilege, preparation) more than they measure writing ability (167). He encourages those evaluating student success to consider other factors, including student and teacher satisfaction. Asao Inoue agrees, and suggests that a review of portfolios by faculty other than the teacher of record adds another layer of evaluation, more meaningful than mere grades. These are very good points, but on a tight budget (rapidly diminishing to nothing), they are not available options for us.

Furthermore, we feel comfortable using grades to evaluate our sample since the philosophical disconnect between the predictive power of standardized tests and the developmental story (Ed White) of actual students in writing classes implies that a good First Year Writing experience makes success available to students who were not, so to speak, predetermined for it. And that possibility is what gets most writing instructors through each day—the sense that what we are doing genuinely has the power to give students another chance. When a writing instructor assigns final grades, those grades reflect this process. In practice, we do not base final grades on a standardized evaluation of a product (essay) detached from the context of its production. While acknowledging that many freshmen fail courses for reasons other than poor performance (attendance, attrition, etc.), we studied assigned grade distributions (see Results Section, Figure 1) and found that a substantial number of students received some form of A or B. Students who fail for non-attendance receive a special grade, so students appearing on the “regular” roster are being evaluated for their classroom performance and/or for their written work. We concluded that if we eliminated non- and irregular-attendance failures, and ran our statistical analyses on the remaining students, we would have a reasonable, cost-effective measure of student learning.

A good grade in First Year Writing does not necessarily indicate mastery of, or even competence in, writing, but it certainly implies it. Writing instructors in our program receive minimal direction on what each course should cover, how to teach it, how to assess it, or on what to base grades. Our most explicit policy is that a grade in W130 should indicate readiness (or lack thereof) for W131. Unlike many other institutions, ours has no system in place by which to assess student writing competency other than assigned grades. We understand that this absence does not represent “best practice” in the field of First Year Writing, yet we contend that statistical evaluation of what we do have may shed light on what happens at our institution, and on others that are similarly situated.

Before we describe our methods and begin to analyze our data, we consider three key points from the literature about DSP. First, Royer and Gilles’s influential 1998 article, which introduced the concepts and goals of Directed Self Placement to a wide and interested audience, argues that students are best able to evaluate their own writing skills. They note that students select courses based on “behavior and self-image” and they add that “[i]t seems right to us that our students are selecting ENG 098 because of their own view of themselves” (62). This strategy may work for some students, or even some institutions. We wonder, however, what the evidence is that students in general can assess how their skills compare to those of their new, university-level peers. Self-placement assumes that students know more about their own writing than either standardized tests or experienced readers would. Yet what students know is how well they performed relative to other members of their high school classes, not relative to the people matriculating with them at college. Thus, they may have excellent knowledge of their own background, without any relevant context in which to use personal experience to make a class placement choice. As educators, we need to consider whether students’ placement decisions reflect ability and skill, or whether they measure confidence and comfort with academic settings instead, which are uncomfortably close to gender, race, and class identifications.

Second, scholars debate the value of statistical and other quantitative measures in assessments of student success. One remark on the Writing Program Administrators listserv indicates that “[w]e know some valuable things that resist measurement” as part of a defense of DSP. Yet Neal and Huot, in the conclusion to their 2003 volume Directed Self Placement: Principles and Practices, indicate that we need more quantitative analysis of DSP in order to make the ethical claim that “the tools we use work in a manner that aids and encourages the educational process for the students it affects” (249). Now that DSP has been in place for a significant number of years, we need to be willing to re-evaluate its effectiveness, based on statistical and other concrete evidence. True, real-life experiences sometimes “resist measurement,” but being sensitive to that circumstance is not the same as letting intuition override evidence.

Finally, how might we use what we find to make meaningful change in departments? Going back to the “bathroom test,” there are just some things we “know” about students. Based on research, and on countless years of classroom experience, we simply “know” that many students need one full year of writing instruction. What meaningful role can this empirical knowledge play as we begin to analyze data and use it to help inform WPAs, administrators, and real teachers in real classrooms? When one of us informally asked colleagues whether they used DSP at their schools, and whether they could demonstrate its effectiveness, the responses were telling. People often noted that the benefits of DSP outweighed their lack of proof that it was working. One respondent remarked that, though he found no, or negative, correlation between DSP and the writing test they had used previously, “our administrators are in love with the DSP concept—it virtually eliminated student and parent complaints—it is here to stay, at least for the time being.”{1} And the professor in charge of writing placement at our college said that, to the extent that any numbers we ran indicated fewer students should take a full year of freshman writing, she didn’t want to hear about it. These comments do more than just ignore the insights offered by statistical analysis. They also insist that learning happens with students in real classrooms, in real lives, that no test or evaluation accurately measures.

Therefore, we set out to examine two questions. First, would the Modified-DSP show relationships to First Year Writing grades that matched or exceeded those associated with more traditional placement tests (i.e., the SAT and ACT)? Second, even if the Modified-DSP did not prove a better predictor of grades, could it still improve our ability to predict classroom performance when added to more traditional placement test scores? As we examined the results of our data analyses, two additional questions arose. Our third question, then, was whether we could suggest a clear guideline for assigning students to freshman writing classes in the future. This, in turn, raised a fourth question: if we followed this data-based rule, what impact would it have on the English composition courses at our university?

Method

Participants

A pool of 3450 undergraduate students who were admitted to our university between 2005 and 2009 and had taken at least one freshman composition course in the English Department served as the sample for this research. Students ranged in age from 16 to 67 (M = 22.21, SD = 6.95). The student cohort demonstrated substantial ethno-cultural diversity: 60.5% Caucasian, 19.7% African American, 13.8% Hispanic, 0.9% Asian American, and 3.7% other backgrounds (e.g., various non-U.S. students and students of First Nations ancestry). The sample was 65.6% female.

Institutional Framework

Our university runs three First Year Writing courses into which we placed students using a modified version of DSP, as reflected in a locally created assessment instrument. We will refer to this instrument as the English Placement Questionnaire (EPQ). For a copy of the instrument and institutionally developed scoring instructions, please see the Appendix. Students report their standardized test scores, record their high school GPA, and then answer several questions about their experience as readers and writers. These responses are converted into numbers, which yield a class recommendation. Though this is a recommendation, not a requirement, students often don’t know that. In practice, then, we remove the component of DSP in which students are informed of their choices, evaluate their strengths, and make a decision for which they then take responsibility. Technically, they do have this choice, but our scoring system functions to elide it. Some students place into W130, which carries credit but does not satisfy the requirements of any major; they then take W131 whether or not they pass W130. Others place directly into W131.{2}

Materials

ACT College Entrance Exam (ACT; ACT Inc. web-page). The ACT assesses high school students' general educational development and their ability to complete college-level work. In our state, high schools do not administer the ACT automatically, so only a minority of students take it, at their own expense.

SAT Reasoning Test (SAT; College Board web-page). The SAT provides information, primarily on English and mathematical abilities, to help colleges make student selection decisions. Since May 2005, the SAT has included a writing section. The SAT, including the Writing Section, is administered to all public high school juniors in our state. Therefore, we have scores for almost all traditional-age students.

Procedure

The Information Technology Department at our university provided a de-identified database for use in the current study. The database included demographic information (age, sex, and ethno-cultural identity), ACT scores, SAT scores, EPQ scores, and grades from freshman-level writing courses.

Results

Missing Data

As with any real-world set of data, the current one had missing data. Most of this came from the SAT, ACT, and EPQ scores. Of the students mentioned above, 428 had some form of ACT data, including English (N = 428), Reading Comprehension (N = 426), and Math (N = 428). Very few students (N = 129) took the optional ACT writing test. The majority of students had recorded SAT scores, including Verbal (N = 2341) and Math (N = 2432). A smaller number (N = 1664) also provided SAT writing scores. Now that the writing section is required, more students will complete the SAT writing test in future years. Out of the students listed as part of the database, 2628 took the EPQ instrument. A minority of the students (N = 1363) had W130 grades and the vast majority of students (N = 2830) had a recorded grade for W131. Thus, the number of participants involved in each subsequent analysis varied as a function of information included in the original database. Furthermore, 1826 of the 2830 students who took W131 did so without enrolling in W130.
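The varying N values reflect two common ways of handling missing scores. The correlations in Table 2 appear to use every student who has both scores in a given pair (pairwise deletion), while the multiple regression reported later in this section keeps only students with every predictor present (listwise deletion). A minimal Python sketch of the difference, using a few hypothetical records; the field names are ours, not the database’s:

```python
# Hypothetical, de-identified records; None marks a score missing from the database.
records = [
    {"sat_writing": 450, "sat_verbal": 460, "sat_math": 470, "epq": 22, "w131": 3.0},
    {"sat_writing": None, "sat_verbal": 500, "sat_math": 480, "epq": 20, "w131": 3.3},
    {"sat_writing": 520, "sat_verbal": None, "sat_math": 510, "epq": None, "w131": 4.0},
    {"sat_writing": 400, "sat_verbal": 410, "sat_math": 420, "epq": 19, "w131": 2.0},
]


def pairwise_n(rows, predictor, outcome):
    """Count students usable for one predictor-outcome correlation (pairwise deletion)."""
    return sum(1 for r in rows if r[predictor] is not None and r[outcome] is not None)


def listwise_n(rows, variables):
    """Count students with every listed variable present (listwise deletion)."""
    return sum(1 for r in rows if all(r[v] is not None for v in variables))


print("SAT Writing & W131:", pairwise_n(records, "sat_writing", "w131"))
print("EPQ & W131:", pairwise_n(records, "epq", "w131"))
print("All predictors & W131:",
      listwise_n(records, ["sat_writing", "sat_verbal", "sat_math", "epq", "w131"]))
```

With real data, listwise deletion is why the regression samples (645 and 644) are smaller than the N for any single SAT Writing correlation.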

Descriptive Statistics

Table 1 describes the distribution of each variable that could serve to predict First Year Writing grades and, therefore, might help guide placement decisions. Our state’s university systems largely admit students based on SAT scores, which explains the higher rate of students with SAT data. Because ours is a largely open-enrollment campus, the standardized test means fall below what people familiar with these tests would expect from national norms (e.g., the national norms for the SAT generally have a mean close to 500 and a standard deviation of approximately 100). Our students’ SAT sub-section means range from 445.69 (Writing) to 455.20 (Verbal), with Math (455.05) falling between the two.

| Variable | N | M (SD) |
|---|---|---|
| ACT English | 428 | 19.18 (4.97) |
| ACT Reading Comprehension | 426 | 20.19 (5.27) |
| ACT Writing | 129 | 7.02 (1.58) |
| ACT Math | 428 | 19.38 (4.68) |
| SAT Verbal | 2341 | 455.20 (87.91) |
| SAT Writing | 1664 | 445.69 (85.17) |
| SAT Math | 2432 | 455.05 (94.85) |
| EPQ | 2689 | 21.73 (3.65) |

Table 1. Number of students with each measure of interest and descriptive statistics (Mean and Standard Deviation) for each variable. The higher N for SAT scores occurred as a result of the state university system’s stated preference for its use.
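To put the SAT means in Table 1 in perspective, each sub-section mean can be expressed as a distance from the approximate national norm quoted above, in national standard-deviation units. The sketch below assumes a national mean of exactly 500 and an SD of exactly 100, which the text gives only as approximations:

```python
# Approximate national SAT norms quoted in the text (mean ~500, SD ~100).
NATIONAL_MEAN = 500.0
NATIONAL_SD = 100.0

# Sub-section means for our students (Table 1).
local_means = {"Verbal": 455.20, "Math": 455.05, "Writing": 445.69}


def standardized_gap(mean, norm_mean=NATIONAL_MEAN, norm_sd=NATIONAL_SD):
    """Distance of a local mean from the national mean, in national SD units."""
    return (mean - norm_mean) / norm_sd


for section, m in local_means.items():
    print(f"SAT {section}: {standardized_gap(m):+.2f} SD relative to the national mean")
```

Under those assumptions, our students’ means sit roughly 0.45 SD (Verbal and Math) to 0.54 SD (Writing) below the national mean.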

Grade Distributions for English W130 and W131

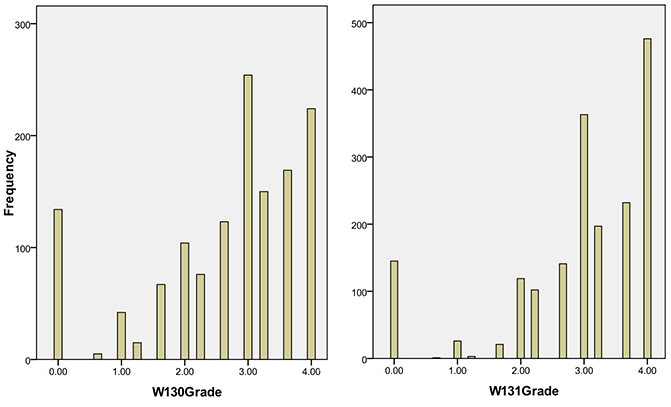

Figure 1 contains grade distributions for our data set after letter grades were converted into numerical equivalents (e.g., A = 4.0, B = 3.0, C = 2.0, D = 1.0, F = 0). As these graphs show, W131 grades on the first attempt clustered near a B, with relatively few students receiving low grades; 50.2% of W131 students earned a B or higher. The pattern differed slightly for W130, but there, too, most students did well: 58.5% of the students who took W130 first earned a B- or higher. This could serve as initial evidence that the Modified-DSP, as reflected in the EPQ, placed students in the appropriate First Year Writing courses.

Figure 1. Grade distributions for students first enrolling in W130 and W131 as a First Year English class. A majority of students in both classes earned grades of B- or above. For W130 grades: Mean = 2.69; Std. Dev = 1.181; N = 1,363. For W131 grades: Mean = 2.97; Std. Dev = 1.108; N = 1,826.
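The conversion and summary statistics behind Figure 1 can be sketched in Python. The whole-letter values come from the text; the plus/minus values (steps of 0.3) are our assumption, since the article does not say how they were converted, and the roster is hypothetical:

```python
from statistics import mean, stdev

# Whole-letter values are given in the text; the plus/minus steps (+/-0.3) are an
# assumed convention, since the text does not state how they were converted.
GRADE_POINTS = {
    "A": 4.0, "A-": 3.7,
    "B+": 3.3, "B": 3.0, "B-": 2.7,
    "C+": 2.3, "C": 2.0, "C-": 1.7,
    "D+": 1.3, "D": 1.0, "D-": 0.7,
    "F": 0.0,
}


def summarize(letter_grades, threshold="B"):
    """Return mean, sample SD, and share of grades at or above `threshold`."""
    points = [GRADE_POINTS[g] for g in letter_grades]
    cutoff = GRADE_POINTS[threshold]
    share = sum(p >= cutoff for p in points) / len(points)
    return mean(points), stdev(points), share


# Hypothetical roster, for illustration only.
roster = ["A", "B+", "B", "B-", "C", "F"]
m, sd, share_b = summarize(roster)
print(f"mean = {m:.2f}, SD = {sd:.3f}, B or higher = {share_b:.1%}")
```

Passing `threshold="B-"` instead gives the "B- or higher" share reported for W130.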

Correlational Analyses to Examine Relationships Between First Freshman English Course Grades and the Suggested Placement Variables

Table 2 contains information on the relationships between the various scores that could be used to place students into one of the two First Year Writing courses. For all hypothesis tests, we set the threshold for statistical significance at p < .001; that is, a relationship reported as significant would arise by chance less than 1 time in 1,000 if no true relationship existed. For English W130, none of the ACT scores showed a significant relationship with classroom performance. The SAT scores, especially the Writing score, appeared to be better predictors of W130 performance. The EPQ failed to show any significant relationship to classroom performance in W130. This was surprising, since the EPQ score derives partly from student self-evaluation and partly from the SAT Verbal score, which on its own did correlate significantly with W130 grades. This suggests that adding student self-evaluations to other measures actually weakened the EPQ’s ability to correctly place students.

| Predictor Variable | English 130 (W130) Grade | English 131 (W131) Grade |
|---|---|---|
| ACT English | .18 (N = 133) | .30* (N = 268) |
| ACT Reading Comprehension | .13 (N = 133) | .20 (N = 266) |
| ACT Writing | .14 (N = 38) | -.02 (N = 88) |
| ACT Math | .18 (N = 133) | .19 (N = 268) |
| SAT Verbal | .14* (N = 898) | .19* (N = 1381) |
| SAT Writing | .21* (N = 663) | .29* (N = 903) |
| SAT Math | .13* (N = 898) | .20* (N = 1382) |
| EPQ | .09 (N = 1281) | .16* (N = 1218) |

Table 2. Correlations (Pearson r) between possible predictor variables and first-semester English grade (W130 or W131, depending on placement). Among the SAT scores, the SAT Writing score showed the strongest relationship to end-of-course grade in both English 130 and English 131. SAT scores show stronger relationships to course grade than the EPQ does. ACT scores failed to show consistent relationships with grades across both courses, which may simply reflect the smaller number of students who took the ACT. Note: * = p < .001.
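For readers who want to check which cells in Table 2 clear the p < .001 bar, here is a minimal Python sketch. It computes Pearson’s r from paired scores and then approximates a two-tailed p-value with Fisher’s z transformation. This is a large-sample approximation, not the exact t-test usually reported, so cells very near the cutoff (for example, ACT Reading Comprehension with W131, r = .20, N = 266) are too close to .001 for it to settle:

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_p_value(r, n):
    """Approximate two-tailed p for H0: rho = 0 using Fisher's z transform.

    z = atanh(r) * sqrt(n - 3) is roughly standard normal under H0 when n is large.
    """
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))


ALPHA = 0.001  # the article's significance threshold

# (label, r, N) taken from the W130 column of Table 2.
for label, r, n in [
    ("ACT English", 0.18, 133),
    ("SAT Writing", 0.21, 663),
    ("EPQ", 0.09, 1281),
]:
    p = r_p_value(r, n)
    verdict = "significant" if p < ALPHA else "not significant"
    print(f"{label}: r = {r:.2f}, N = {n}, p ~ {p:.2g} -> {verdict} at p < .001")
```

The three W130 rows mirror the table: only SAT Writing clears the threshold, while the EPQ (p of roughly .0013) falls just short despite its large N.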

The pattern of results varied somewhat for English W131. For students who enrolled directly in W131, the ACT English score, but not the ACT Reading Comprehension, ACT Writing, or ACT Math scores, showed a statistically significant relationship with class performance and thus could reasonably be used to predict classroom performance for future students. The SAT pattern for W131 paralleled that for W130, with SAT Writing, SAT Verbal, and SAT Math all showing significant relationships with course outcome. As with W130, the SAT Writing score showed the strongest relationship of the SAT scores to W131 course grade. In contrast to W130, the EPQ did show a statistically significant relationship to W131 final grade, but a weaker one than any of the SAT scores.

A Further Test of SAT and DSP Scores to Predict Outcome

At this point, it certainly appeared that the EPQ underperformed each SAT test in predicting classroom performance, and thus the school might drop it in favor of the institutionally less expensive SAT. However, the EPQ might still improve predictions of class performance above and beyond SAT scores, and, based on the data available, it remained unclear whether the SAT Verbal, SAT Math, or EPQ scores could improve prediction of success beyond the SAT Writing score. Therefore, for each composition course, we ran a hierarchical multiple-regression analysis to examine whether any of these scores improved on the course grade prediction provided by the SAT Writing score. The SAT Writing score entered the analysis on the first step, the SAT Verbal and Math scores on the second step, and the EPQ on the third step. Given the relatively low number of students reporting ACT scores, the ACT English score was not included in this set of analyses.

This type of regression requires every predictor variable to be present for a student to be included in the analysis. For English W130, this reduced the sample size to 645. Neither of the later steps [Fchange(2, 641) = 0.69, p > .001; Fchange(1, 640) = 2.10, p > .001] improved predictions of class performance beyond those based on the SAT Writing score. For English W131, this reduced the sample size to 644. Again, neither the SAT Verbal and Math scores nor the EPQ improved on the predictions based on the SAT Writing score [Fchange(2, 640) = 3.35, p > .001; Fchange(1, 639) = 8.36, p > .001]. The implication, then, was that the SAT Writing sub-test alone could be used for future placement decisions, without the other two SAT sub-tests or the EPQ.
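The F-change statistics above test whether the R² gained at a step is larger than chance would produce: F_change = [(R²_full − R²_reduced) / k_added] / [(1 − R²_full) / (n − k_full − 1)], where k_full counts the predictors in the larger model. The degrees of freedom reported in the text follow from this formula. With 645 W130 students, adding SAT Verbal and Math to SAT Writing (three predictors) gives (2, 641), and then adding the EPQ (four predictors) gives (1, 640). The W131 sample of 644 gives (2, 640) and (1, 639). The article does not report R² values, so those in this minimal sketch are hypothetical:

```python
def f_change(r2_reduced, r2_full, n, k_full, k_added):
    """F test for the R-squared gained when k_added predictors join a model.

    r2_reduced: R^2 before the step; r2_full: R^2 after it;
    n: students with complete data; k_full: predictors in the larger model.
    """
    df1 = k_added
    df2 = n - k_full - 1
    f = ((r2_full - r2_reduced) / df1) / ((1 - r2_full) / df2)
    return f, df1, df2


# Hypothetical R^2 values (the article does not report them), W130 sample of 645.
r2_step1, r2_step2, r2_step3 = 0.040, 0.042, 0.045
print(f_change(r2_step1, r2_step2, n=645, k_full=3, k_added=2))  # step 2: SAT Verbal + Math
print(f_change(r2_step2, r2_step3, n=645, k_full=4, k_added=1))  # step 3: EPQ
```

A p-value for the resulting F can be obtained from the F distribution, for example with SciPy’s `scipy.stats.f.sf(f, df1, df2)`.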

What Guidance Can the SAT Writing Score Provide?

According to our data, 39.5% of our students take W130 before taking W131. Practically speaking, all our undergraduate students take W131. Based on the multiple regression analyses reported above, students with an SAT Writing score of 480 or better would, on average, have an expected W131 grade of B or better. And we know from our grade distribution (see above) that slightly fewer than half of W131 students earn below a B, suggesting that a B- or below indicates a lower level of student accomplishment. However, if we placed all students with SAT Writing scores below 480 into W130, then 65.6% of our students would take this course in addition to W131, and we simply don’t have the staff to support the increase in teaching hours that change would represent. Requiring more than 65% of our students to take two semesters of First Year Writing would likely improve their writing, and their education in general. Our data point in that direction: the only variable in our data set that predicted W131 grades more strongly than the SAT Writing score was a student’s W130 grade, for those who took W130 first. The correlation between W130 and W131 grades was r(1004) = .44, p < .001. Our next step was to explore some of the consequences, statistically and otherwise, of both the status quo and a change to it.
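A cut score like 480 can be read off a fitted regression line by solving "predicted grade = target grade" for the test score. This is a minimal sketch, assuming a single-predictor linear model (grade = intercept + slope × SAT Writing), which corresponds to the first step of the regression above. The coefficients and scores below are hypothetical, since the article does not report them. Because SAT section scores are reported in 10-point steps, the solution is rounded up, so "below 480" and "470 or lower" select the same students:

```python
import math


def cut_score(intercept, slope, target_grade=3.0, step=10):
    """Lowest reportable score whose predicted grade reaches target_grade.

    Assumes a simple linear prediction: grade = intercept + slope * score.
    SAT section scores are reported in 10-point steps, hence the rounding up.
    """
    raw = (target_grade - intercept) / slope
    return math.ceil(raw / step) * step


def share_below(scores, cut):
    """Fraction of students whose score falls below the cut (i.e., would go to W130)."""
    return sum(s < cut for s in scores) / len(scores)


# Hypothetical coefficients and scores, for illustration only.
cut = cut_score(intercept=0.62, slope=0.005)
print("cut score:", cut)
print("share placed in W130:", share_below([400, 470, 480, 520], cut))
```

Applied to the full list of students’ SAT Writing scores, `share_below` gives the projected W130 enrollment rate under such a rule.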

If Implemented, What Impact Would the Suggested SAT Writing Score Have on Class Composition?

Under our current system, members of our three primary ethno-cultural groups do not take W130 at rates equivalent to their representation in our overall data set, χ2(2) = 74.63, p < .001. Currently, both African-American students (26.6% of students in W130, versus 21.5% of students in the three primary ethno-cultural groups) and Hispanic-American students (19.5% of students in W130, versus 15.5% of the three groups) take W130 at higher than expected rates. Caucasian students (53.9% of students in W130, versus 63.0% of the three groups) take this course at lower than expected rates. Overall, 49.2% of African-American students, 49.8% of Hispanic students, and 33.9% of Caucasian students currently enroll in W130. Part of this may be due to differences in preparation for college-level course work. On the SAT Writing test, for example, the three groups show statistically significant differences, F(2, 1508) = 51.02, p < .001. An F-test indicates only that at least one group mean differs from the others, not which groups differ; we therefore conducted post-hoc testing to determine which groups differed significantly from each other. Post-hoc Tukey B analyses indicated that each group’s mean differed significantly from the other two, with African-American students showing the lowest scores (M = 401.56, SD = 71.15), Hispanic-American students falling in the middle (M = 423.05, SD = 87.07), and Caucasian students showing the highest scores (M = 459.20, SD = 83.33).
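The two omnibus tests in this paragraph can be sketched from summary figures. The article does not say which chi-square test it ran or give the underlying counts, so this sketch assumes a goodness-of-fit test of W130 enrollment against each group’s share of the three-group pool, applied to a hypothetical cohort of 1,000 W130 students; with exactly 2 degrees of freedom, the chi-square tail probability has the closed form exp(−x/2). The one-way ANOVA F is computed from group sizes, means, and SDs. The means and SDs come from the text, but the group sizes are hypothetical, chosen only so the degrees of freedom match the reported F(2, 1508). The Tukey post-hoc comparisons are not reproduced here:

```python
import math


def chi_square_gof(observed, expected_props):
    """Chi-square goodness of fit: observed counts vs. expected proportions."""
    n = sum(observed)
    expected = [p * n for p in expected_props]
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return chi2, len(observed) - 1


def chi2_sf_df2(x):
    """P(X > x) for a chi-square variable with exactly 2 df, which equals exp(-x/2)."""
    return math.exp(-x / 2)


def anova_from_summary(groups):
    """One-way ANOVA F from (n, mean, sd) per group; returns F, df_between, df_within."""
    total_n = sum(n for n, _, _ in groups)
    k = len(groups)
    grand_mean = sum(n * m for n, m, _ in groups) / total_n
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between, df_within = k - 1, total_n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Expected shares (Caucasian, African American, Hispanic) of the three-group pool,
# and a hypothetical W130 cohort of 1,000 split by the reported W130 percentages.
chi2, df = chi_square_gof([539, 266, 195], [0.630, 0.215, 0.155])
print(f"chi2({df}) = {chi2:.2f}, p = {chi2_sf_df2(chi2):.2g}")

# SAT Writing means and SDs from the text; the group sizes are hypothetical
# (they sum to 1,511, which gives the reported df of 2 and 1,508).
groups = [(325, 401.56, 71.15), (234, 423.05, 87.07), (952, 459.20, 83.33)]
f, df_b, df_w = anova_from_summary(groups)
print(f"F({df_b}, {df_w}) = {f:.2f}")
```

Because the counts and group sizes are invented, the printed statistics will not match the reported χ2 = 74.63 or F = 51.02; the sketch shows only how each statistic is built.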

Currently, 39.7% of students enroll in W130. If we were to place students into W130 based exclusively on SAT Writing scores of 470 or lower, the percentage of students who take W130 would rise substantially, to 65.6% of enrolled students. The rates would change sharply for the three primary ethno-cultural groups: the percentage of African-American and Hispanic students taking the course would increase dramatically (to 84.9% and 74.1%, respectively), and the percentage of Caucasian students would rise less steeply (to 59.9%, up from 33.9%). The percentage of students in future W130 classes who are African American would decrease from 26.6% to 15.9%. The percentage of Hispanic students would increase from 19.5% to 21.1%. The percentage of White students would increase from 53.9% to 63%.

Using the same cut score for all groups only makes sense if the correlation between SAT Writing and W131 success is similar across all three groups. Evidence for this came from the fact that, although the correlations appeared somewhat different for African-American [r(267) = .31], Hispanic [r(118) = .19], and Caucasian [r(618) = .22] students, pairwise z tests indicated that none of these correlations differed significantly from the others. The largest z-score, and therefore the strongest evidence for a difference in relationship, came from comparing African-American and Caucasian students (z = 1.32, p > .05), but it did not reach statistical significance. Thus, using separate placement criteria for different ethno-cultural groups received no statistical support in the current research.
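The comparison of correlations across groups is consistent with the standard Fisher r-to-z test for independent samples, which we assume is the test used here: each r is transformed with atanh, and the difference is divided by sqrt(1/(n1 − 3) + 1/(n2 − 3)). Since r(df) reports df = n − 2, each group’s n is df + 2. Applied to the reported correlations, this minimal sketch reproduces the largest z of 1.32 (African-American vs. Caucasian students):

```python
import math


def compare_independent_rs(r1, n1, r2, n2):
    """Fisher r-to-z test for the difference between correlations in two independent groups.

    Returns the z statistic and its two-tailed p-value (normal approximation).
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    z = (z1 - z2) / se
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# r and df as reported, with n = df + 2 for a Pearson correlation.
groups = {
    "African American": (0.31, 267 + 2),
    "Hispanic": (0.19, 118 + 2),
    "Caucasian": (0.22, 618 + 2),
}

names = list(groups)
for i in range(len(names)):
    for j in range(i + 1, len(names)):
        (ra, na), (rb, nb) = groups[names[i]], groups[names[j]]
        z, p = compare_independent_rs(ra, na, rb, nb)
        print(f"{names[i]} vs {names[j]}: z = {z:.2f}, p = {p:.2f}")
```

None of the three pairwise p-values falls below .05, which matches the conclusion that a single cut score is statistically defensible for all three groups.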

Discussion

We began this research by asking whether our EPQ instrument worked—whether it placed students into courses where they did well. Institutionally, this question came up in response to policies offered by peer institutions that allow students to pass out of First Year Writing if their standardized test scores exceed a selected value. As an English Department, we need to demonstrate that requiring students to take more coursework than they would take at peer institutions benefits them appreciably, in order to justify a policy that might cost us students. In doing the research, though, what we found is that the SAT Writing test and many SAT and ACT scores predicted success more accurately than our EPQ. This proved surprising since the EPQ included SAT Verbal scores as one component of its calculations. So, in fact, our department takes something that works and makes it less effective. Previous scholarship, however, challenges what it means when a test “works,” since an instrument can be valid and effective, but still empty if it can’t “support and document purposeful, collaborative work by students and teachers” (Moss 110). Moss argues that standardized tests flatten out educational experiences, and they do so unevenly, with disadvantaged, minority students paying the higher price (113-4). If our EPQ corrected for this imbalance, that would be a strength. At our institution, however, the evidence indicates otherwise.

When DSP was designed, one premise was that students know more about their strengths and weaknesses than teachers who have not yet taught them, and thus are better qualified to place themselves. While this premise appears to give students important control, it also enables them to make decisions “centered on behavior and self-image—not test scores or grades” (Royer and Gilles 62). Our data suggest that measures like “behavior and self-image” may be subtly influenced by race and other factors. Perhaps non-white students, intimidated by college and subtly influenced by generations of covert racism, are less likely than white students to give themselves credit for their skills and background. Since DSP became widespread, researchers have asked whether certain groups of students under- or overestimate their own writing skills (this work is well summarized by Reynolds, especially 82-91). Several studies show that students with “strong self-efficacy about their ability to write well did write well” (Reynolds 87) and that students who are apprehensive about their writing ability are more likely to be weak writers (89). Moreover, as Ed White and others have shown, success in First Year Writing, even a good grade in First Year Writing, reflects circumstances not measured by standardized tests taken in high school. Yet in our sample, where most passing students receive a B or better in W131, the behavioral and self-efficacy markers that allow a student to place directly into W131 appeared more available to white students than to non-white ones. Further, an instrument that takes self-confidence into account turns out to predict which students will fall within that large, successful majority no better than standardized test placement does, and in fact appears to do a far worse job.

There are problems with standardized tests (see FairTest for one summary). But at least for our sample, the EPQ does not solve them. Further, we looked in vain in the literature for evidence that DSP had solved these problems in other contexts. Whether or not it seems like a good idea, we see no statistical evidence that DSP is more reliable than using test scores, the method it supplanted.

Conclusion

Our research shows that DSP in the modified form of our placement matrix does not predict student success as measured by First Year Writing grades as well as simple standardized test scores do. Yet DSP appeals to scholars and departments because it appears to solve real problems associated with other forms of placement, including those based on test scores. Ed White argues that placing students into leveled writing courses is crucial, but that the tests we use to do so “tend to be a social sorting mechanism” (138), reflecting parental income more than any other factor. He believes DSP, if done well, is preferable to placement by test score, which he compares to Wal-Mart: the information standardized tests provide is cheap and convenient, “but on many, perhaps most campuses, the information will be meaningless or worse” (139). Are there campuses, however, at which test scores do work? Our data indicate that at our school, they work better than DSP does, and the SAT Writing exam appears to work equally well across groups. To understand why DSP does not serve our student population well, we need to explore the connection between student confidence and student success. Yet confidence is elusive, subjective, and very hard to quantify or study. Research in educational psychology complicates the link between confidence and competence: it finds that generalized confidence is fairly equal across genders, but that confidence on specific tasks is less balanced, since “the present investigation suggests that the problem may not be that women necessarily lack confidence but that, in some cases, men have too much confidence, especially when they are wrong!” (Lundeberg 120). Thus, we cannot assume a direct relationship between confidence and ability, if only because factors like gender affect how confident people feel.

The University of Michigan has addressed the troubling issue of confidence’s uneven role in DSP by asking incoming freshmen to complete a writing task before they assess themselves. Before orientation, students read a ten-page article, write a 750-900 word essay in response to it, and then answer follow-up questions about that writing experience (see the Sweetland Writing Center’s page on Directed Self Placement). {3} Though this scheme does not eliminate the problem that student confidence does not correlate well with student ability, it does focus student self-assessment on a specific and recent task. No one in the university infrastructure needs to read or evaluate these essays; their purpose is to give students a specific task and then ask them how they think they did. By narrowing the scope of the self-assessment, this strategy partly limits how much confidence can shape broader claims about skills and ability.

Michigan’s revision of DSP retains the strengths of the concept while attempting to address one of its limitations, and longitudinal analysis may reveal that it reduces the “wild card” factor of student confidence. As Luna observes, self-confidence in student writing may emerge when “high school students become reflective thinkers able to use self-evaluation to guide their learning” (391), but this process must first be taught and practiced. Most students entering our college do not receive this preparation in high school. Standardized tests, for all their biases, may provide a fairer measure of our particular students at this particular time. Ten years ago, our university agreed to fund the EPQ program because our writing faculty argued that it represented the best practices in the field. When, upon review, we determined that it was less accurate than the simpler and cheaper test score placement, and that it placed minority students unfairly, our administration chose to revert to a test score method rather than fund another DSP modification. In the short term at least, we had lost their confidence.

Yet we believe that our university’s response to our analysis is not only bad news. True, DSP as a concept lost enough credibility that we could not muster financial support to revise our EPQ and try again. But our data showed enough points of strong correlation to support two conclusions: students who take W130 are more likely to do well in W131, and students with high SAT Writing scores do well in W131 whether or not they take W130. We concluded that our EPQ encouraged many students to skip W130 when taking the course would have benefitted them greatly. We used this information to gain institutional support for increasing the number of W130 sections and placing more students in a full year of First Year Writing. Our analysis leads us to agree with Peter Elbow, who points out “the stranglehold link in our current thinking between helping unskilled writers and segregating or quarantining them into separate basic writing courses” (88). Our university agreed to place more students into W130, since our data reveal that W130 enrollment predicts W131 success, and since Elbow notes that heterogeneous instruction can help all students achieve. We plan to eliminate the EPQ, since its reliance on confidence does not serve our particular students well, and to choose an SAT Writing cutoff score that will increase enrollment in W130, though not beyond what the department can reasonably staff. We plan to follow up on our results each year to determine whether our students fare better under this system.

Appendix

The appendix is a handout, so it is delivered as PDF only.

English Placement Questionnaire (EPQ) and Score sheet for English Placement Questionnaire (PDF)

Notes

1. Quoted from an e-mail to the WPA listserv.

2. The lowest-scoring students are placed into W030, a non-credit, basic study skills course designed to prepare struggling students to take W130. We did not collect or analyze data on this course, which is taken by a very small number of students.

3. Since these changes are recent, there are no data yet demonstrating their effectiveness, but the program is in the process of collecting and analyzing such data.

Works Cited

Elbow, Peter. “Writing Assessment in the 21st Century: A Utopian View.” Composition in the Twenty-First Century: Crisis and Change. Ed. Lynn Z. Bloom, Donald A. Daiker, and Edward M. White. Carbondale: Southern Illinois UP, 1996. 83-100. Print.

Haswell, Rich. “Post Secondary Entrance Writing Placement.” <http://comppile.org/profresources/placement.htm>. Web. 18 Jan. 2011.

Huot, Brian. “Towards a New Discourse of Assessment for the College Writing Classroom.” College English 65.2 (Nov. 2002): 163-80. Print.

Inoue, Asao B. “Self-Assessment As Programmatic Center: The First Year Writing Program and Its Assessment at California State University, Fresno.” Composition Forum 20 (Summer 2009). <http://compositionforum.com/issue/20/calstate-fresno.php>. Web. 15 Mar. 2011.

Luna, Andrea. “A Voice in the Decision: Self-Evaluation in the Freshman English Placement Process.” Reading and Writing Quarterly 19 (2003): 377-92. Web. 11 Nov. 2010.

Lundeberg, Mary A., Paul W. Fox, and Judith Puncochar. “Highly Confident but Wrong: Gender Differences and Similarities in Confidence Judgments.” Journal of Educational Psychology 86.1 (1994): 114-21. Web. 11 Nov. 2010.

Moss, Pamela A. “Validity in High Stakes Writing Assessment: Problems and Possibilities.” Assessing Writing 1.1 (1994): 109-28. Print.

Neal, Michael, and Brian Huot. “Responding to Directed Self-Placement.” Directed Self-Placement: Principles and Practices. Ed. Daniel J. Royer and Roger Gilles. Cresskill: Hampton P, 2003. 243-55. Print.

Reynolds, Erica J. “The Role of Self-Efficacy in Writing and Directed Self Placement.” Directed Self-Placement: Principles and Practices. Ed. Daniel J. Royer and Roger Gilles. Cresskill: Hampton P, 2003. 73-103. Print.

Royer, Daniel J., and Roger Gilles. “Directed Self-Placement: An Attitude of Orientation.” College Composition and Communication 50.1 (Sept. 1998): 54-70. Print.

Shor, Ira. “Errors and Economics: Inequality Breeds Remediation.” Mainstreaming Basic Writers: Politics and Pedagogies of Access. Ed. Gerri McNenny. Mahwah: Erlbaum, 2001. 29-54. Print.

Singer, Marti. “Moving the Margins.” Mainstreaming Basic Writers: Politics and Pedagogies of Access. Ed. Gerri McNenny. Mahwah: Erlbaum, 2001. 101-18. Print.

University of Michigan, Sweetland Writing Center. <http://www.lsa.umich.edu/swc/requirements/first-year/dsp>. Web. 18 Jan. 2011.

White, Edward M. “Testing In and Testing Out.” WPA: Writing Program Administration 32.1 (Fall 2008): 129-42. Web. 11 Nov. 2010.

“Placing Students in Writing Classes” from Composition Forum 25 (Spring 2012)

Online at: http://compositionforum.com/issue/25/placing-students-modified-dsp.php

© Copyright 2012 Anne Balay and Karl Nelson.

Licensed under a Creative Commons Attribution-Share Alike License.
